Exposing Darcula: a rare look behind the scenes of a global Phishing-as-a-Service operation

Read part 1 of the news story about these findings at NRK.no

Read part 2 of the news story about these findings at NRK.no

Research into a global phishing-as-a-service operation will take you through:

- Hundreds of thousands of victims spanning the globe

- A glimpse into the lifestyle of the operators

- Technical insight into the phishing toolkit

- The backend of a phishing threat actor operating at scale

The scam industry has seen explosive growth over the past several years. The types of scams and methods used are constantly evolving as scammers adapt their techniques to continue their activities. They often capitalise on new technologies and target areas where our societies have yet to build mechanisms to protect themselves.

This story begins in December 2023, when people all over the world, including a large portion of the Norwegian population, started to receive text messages about packages waiting for them at the post office. The messages would come in the form of an SMS, iMessage or RCS message. What we were witnessing was the rise of a scam technique known as smishing, or SMS phishing.

Such messages have one thing in common: they impersonate a brand that we trust to create a credible context for soliciting some kind of personal information, thus tricking us into willingly giving away our information.

Some scams are easier to spot than others. Spelling errors, poor translations, strange numbers or links to sketchy domains often give them away. But even tell-tale signs can be easy to miss on a busy day. When a large number of people are targeted, some will be expecting a package. And the tactic is obviously working. If it wasn’t worth their while, the scammers wouldn’t have invested so much time, money and effort.

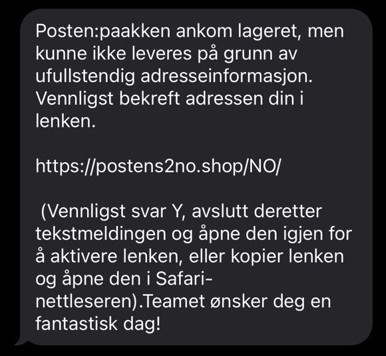

The photo above shows an actual message that we received. The sender claims to be the Norwegian Postal Service, informing us that a delivery is on hold until ‘missing’ address details are provided. If you enter the missing details, you are requested to pay a missing postage fee. Now while there are legitimate circumstances where individuals are requested to pay tolls when receiving packages internationally, this was not one of them.

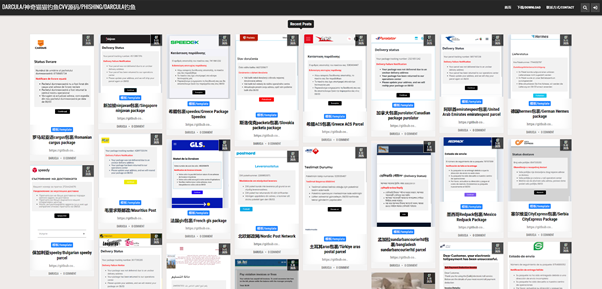

Some quick Googling hinted at the vast scale of the operation. We found an abundance of social media posts and news articles with screenshots of suspiciously similar messages, written in different languages targeting victims all over the world.

The general advice given by the police, targeted brands, banks and the security community was simple: do not click the link, delete the message.

Many people in our industry are driven by a strong sense of curiosity, a need to look under the hood and understand how things actually work. It was probably this thirst for knowledge combined with our backgrounds in ethical hacking and application security that influenced our decision to go against all conventional advice: we decided to click the link.

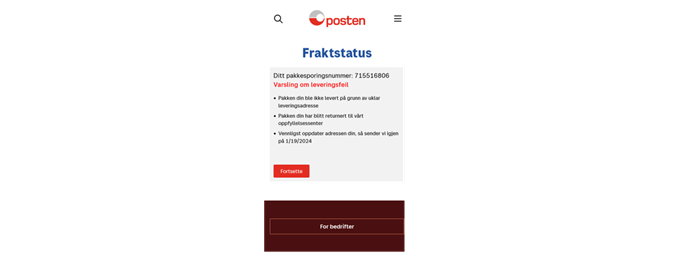

What we saw was more or less what we expected. On our mobile device, there was a page claiming to be the Postal Service, inviting us to click a button to continue and fill out our address information. After a few such pages, we were asked to pay a small fee, which confirmed the true purpose of the scam: to steal our credit card information.

Looking beneath the surface

Cellular Devices only

The phishing messages we received were sent to our mobile devices, and were expected to be opened on mobile devices. Since smartphones are not particularly suited to do forensics on, we needed to be able to look at the phishing page from an ordinary computer.

However, when investigating the software from a computer, we discovered several anti-forensics features implemented. The first features were implemented in an effort to ensure the links could only be visited by mobile devices on cellular networks.

Such features are often used so that security solutions that do not meet these criteria cannot inspect the link for malicious content. In turn, this protects the messages from being flagged as phishing attempts, and protects the phishing sites and domains from being flagged as malicious and subsequently taken down.

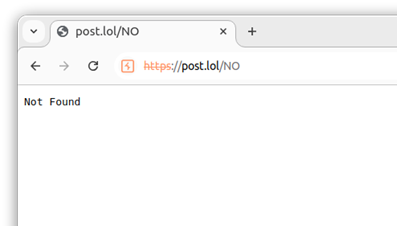

So, when browsing to the link on a computer, we were presented with a Not Found message.

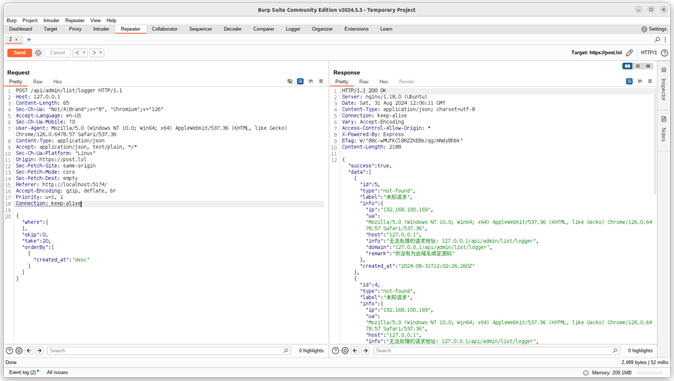

When looking at web applications, we use a tool called Burp. The tool creates a man-in-the-middle proxy that operates by sitting in between one’s web browser and a server, so that we’re able to see and modify traffic as it moves back and forth over the internet. To pretend we were opening the link using a mobile phone, we changed the User-Agent header to match that of our phone.

Instead of:

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36

We would identify ourselves as:

User-Agent: Mozilla/5.0 (iPhone; CPU iPhone OS 17_7_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/18.4 Mobile/15E148 Safari/604.1

However, changing the User-Agent header was not enough: we still got the “404 Not Found” error.

The only remaining difference between the request sent from our phone and the one sent from our computer was the IP address it originated from. Presumably the phishing software was also configured to only present the content to requests originating from cellular networks. Using the phone as a WiFi hotspot, we were finally able to load the phishing page on a computer where we had the proper tools to investigate the software further.
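The behaviour described above is consistent with a server-side gate that checks two things at once. Below is a minimal sketch of such a gate, not the kit's actual logic: the marker strings, the `gate` function and the address range are hypothetical, and the "cellular" range is a reserved documentation network rather than a real carrier range.

```python
import ipaddress

# Hypothetical stand-ins: the real checks used by the kit are not known.
# 203.0.113.0/24 is a reserved documentation range, used here as "cellular".
CELLULAR_RANGES = [ipaddress.ip_network("203.0.113.0/24")]
MOBILE_MARKERS = ("iPhone", "Android")

DESKTOP_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36")
IPHONE_UA = ("Mozilla/5.0 (iPhone; CPU iPhone OS 17_7_2 like Mac OS X) "
             "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/18.4 "
             "Mobile/15E148 Safari/604.1")


def is_mobile_user_agent(user_agent: str) -> bool:
    """True if the User-Agent contains one of the mobile marker strings."""
    return any(marker in user_agent for marker in MOBILE_MARKERS)


def is_cellular_ip(ip: str) -> bool:
    """True if the source address falls inside an assumed cellular range."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in CELLULAR_RANGES)


def gate(user_agent: str, ip: str) -> int:
    """Return the HTTP status a visitor would see under this model."""
    if is_mobile_user_agent(user_agent) and is_cellular_ip(ip):
        return 200
    return 404


print(gate(DESKTOP_UA, "198.51.100.7"))  # desktop browser, non-cellular IP
print(gate(IPHONE_UA, "198.51.100.7"))   # spoofed User-Agent only
print(gate(IPHONE_UA, "203.0.113.25"))   # spoofed User-Agent via phone hotspot
```

Under this model, spoofing the header alone still fails the second check, which matches what we observed before switching to the phone's hotspot.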

Message Encryption

Once we got things up and running, we could see that they were using a common messaging library called Socket.IO. We could also see the messages they were sending back and forth. Here is an example:

    {
      "msg": {
        "type": "824d02e7cf6ec64a44710b06ef8cfa0e",
        "data": "U2FsdGVkX19JWH3V+KnWIdPjzlF/wBhLj4W/rYovdJmHineTSuwIxfkSQyLycrSfTyBEAai8R6yrLQnIcd3FtjqDoXPyh9j28hXEyaYAjyo4ZuTx0jaaJE/CdV0F/[snipped]"
      },
      "user": [],
      "room": [
        "21232f297a57a5a743894a0e4a801fc3"
      ]
    }

Judging by the character sets and lengths of the message elements, we suspected that “type” and “room” were probably MD5 hashes, and that “data” looked like a Base64-encoded string. More on room and type later.
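This kind of first guess can be expressed as a couple of pattern checks. The sketch below is only a heuristic, and the function name and labels are our own: 32 lowercase hex characters merely suggest a 128-bit digest such as MD5, and a Base64-looking alphabet proves nothing about the content.

```python
import re

# 32 lowercase hex characters: the shape of an MD5 digest (or any 128-bit hash).
MD5_HEX = re.compile(r"[0-9a-f]{32}")
# Standard Base64 alphabet with optional padding.
BASE64_CHARS = re.compile(r"[A-Za-z0-9+/]+={0,2}")


def guess_encoding(value: str) -> str:
    """Classify a string by shape only; this is a hint, not a proof."""
    # Hex digits are also valid Base64 characters, so test the hex shape first.
    if MD5_HEX.fullmatch(value):
        return "md5-like hex digest"
    if BASE64_CHARS.fullmatch(value):
        return "base64-like"
    return "unknown"


print(guess_encoding("824d02e7cf6ec64a44710b06ef8cfa0e"))
print(guess_encoding("21232f297a57a5a743894a0e4a801fc3"))
print(guess_encoding("U2FsdGVkX19JWH3V+KnWIdPj"))
```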

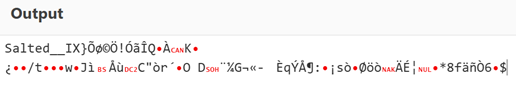

Decoding the data element yielded the string “Salted__” followed by indecipherable bytes, which was a pretty good indication that there was some kind of encryption going on.
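The decoding step can be reproduced on the visible part of the sample message. The sketch below decodes only the first 24 Base64 characters, since the rest of the value is snipped, and splits the result the way OpenSSL-style salted output is commonly laid out: an eight-byte marker, an eight-byte salt, then ciphertext. That layout is an assumption about this particular payload.

```python
import base64

# First 24 Base64 characters of the "data" value shown earlier. The remainder
# is snipped in the sample, so only this visible prefix is decoded.
VISIBLE_PREFIX = "U2FsdGVkX19JWH3V+KnWIdPj"

raw = base64.b64decode(VISIBLE_PREFIX)

# Assumed layout: 8-byte marker, 8-byte salt, then the encrypted bytes.
magic, salt, ciphertext_start = raw[:8], raw[8:16], raw[16:]

print(magic)                   # readable ASCII marker
print(salt.hex())              # random-looking salt
print(ciphertext_start.hex())  # first bytes of the encrypted payload
```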

In the application security world, this is referred to as client-side encryption. And more often than not, it’s an indication that somebody has done something fishy (pun intended).

In most circumstances it doesn’t make sense to encrypt messages like this, because it requires the encryption keys to be stored on the client, and the network traffic between a client and server is already protected in transit by HTTPS. But in this case, the application is trying to protect itself from a different kind of party, namely our kind: security researchers with man-in-the-middle proxies. This additional encryption acts as an obfuscation layer to hide the inner workings of the phishing protocol. But as we were in control of the client, we were able to inspect the messages before they were encrypted, which even enabled us to extract the encryption keys.

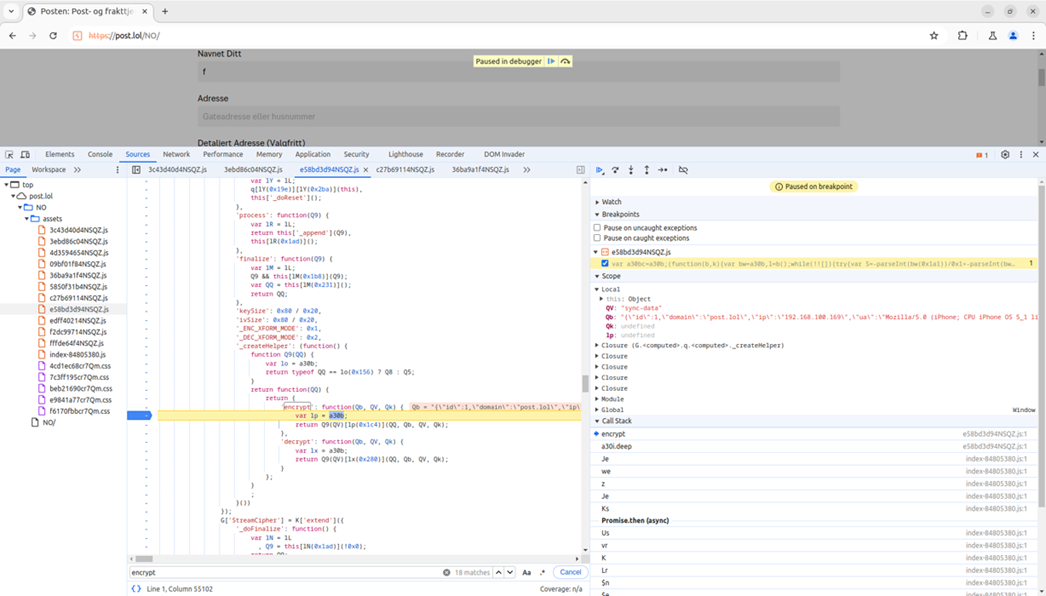

Curious as we were about the content of those messages, we started to poke around in the client-side JavaScript code. And sure enough, we soon stumbled upon some encrypt and decrypt functions, as well as the encryption keys they were using.

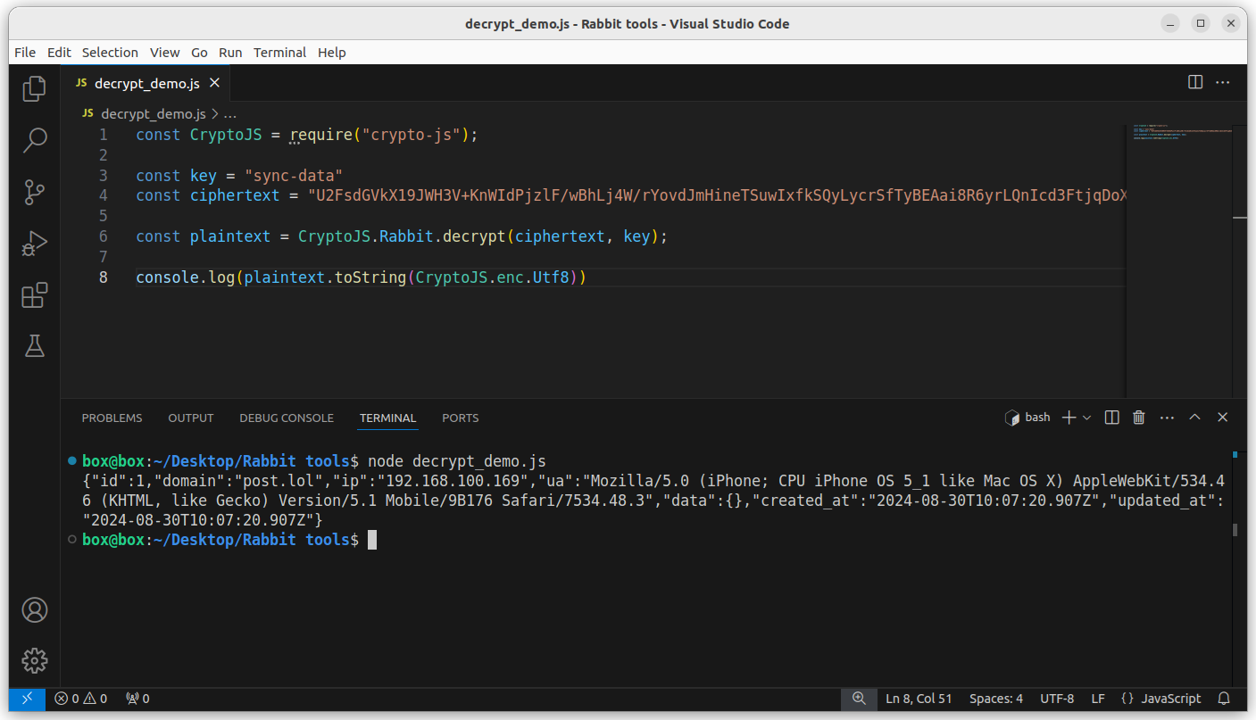

We could see they were using a JavaScript library called crypto-js, and an algorithm called Rabbit, to encrypt the “data” element in the messages. We then wrote a few quick lines of JavaScript that allowed us to decrypt the content of the messages.
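For readers curious about what sits between a passphrase and the cipher, here is a sketch of the key derivation step only, not of Rabbit itself. It assumes the kit used crypto-js in passphrase mode, which to our understanding derives key material with an OpenSSL-style, MD5-based routine and a single iteration, and that Rabbit takes a 128-bit key and a 64-bit IV. The function name and the passphrase are hypothetical; the real key is deliberately not reproduced.

```python
import base64
import hashlib


def evp_bytes_to_key(passphrase: bytes, salt: bytes, key_len: int, iv_len: int):
    """OpenSSL-style derivation: chained MD5 over previous block + passphrase + salt."""
    derived = b""
    block = b""
    while len(derived) < key_len + iv_len:
        block = hashlib.md5(block + passphrase + salt).digest()
        derived += block
    return derived[:key_len], derived[key_len:key_len + iv_len]


# Hypothetical passphrase for illustration only.
PASSPHRASE = b"not-the-real-key"
# Salt taken from the visible prefix of the sample message (bytes 8 to 15).
SALT = base64.b64decode("U2FsdGVkX19JWH3V+KnWIdPj")[8:16]

# Assumed sizes for Rabbit: 16-byte key, 8-byte IV.
key, iv = evp_bytes_to_key(PASSPHRASE, SALT, key_len=16, iv_len=8)
print(len(key), len(iv))
```

The derived key and IV would then be handed to a Rabbit implementation, which is outside the scope of this sketch.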

After this quick test, we wrote some code that allowed us to see and modify the plaintext version of the messages in Burp as they were sent in real-time. The JavaScript code also revealed that the type and room parameters from earlier were the MD5 hashes of “sync-data” and “admin”. Now we could actually see what the application was doing, and edit messages if we wanted to. Below is an example of such a decrypted message.
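The hash-to-name mapping is easy to double-check. In our case the names came from the JavaScript code, but the same check can be sketched with a small wordlist; the candidate list and the `resolve` helper below are illustrative only.

```python
import hashlib

# Illustrative guesses; in practice the names were read from the client code.
CANDIDATE_NAMES = ["admin", "sync-data", "default", "user"]

# Precompute digest -> name so observed hashes can be translated back.
LOOKUP = {hashlib.md5(name.encode()).hexdigest(): name for name in CANDIDATE_NAMES}


def resolve(digest: str) -> str:
    """Return the known name for a digest, or the digest itself if unknown."""
    return LOOKUP.get(digest, digest)


print(resolve("21232f297a57a5a743894a0e4a801fc3"))  # the "room" value
print(resolve("824d02e7cf6ec64a44710b06ef8cfa0e"))  # the "type" value
```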

    {
      "msg": {
        "type": "sync-data",
        "data": "{\"id\":1,\"domain\":\"post.lol\",\"ip\":\"192.168.100.169\",\"ua\":\"Mozilla/5.0 (iPhone; CPU iPhone OS 5_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9B176 Safari/7534.48.3\",\"data\":{},\"created_at\":\"2024-08-30T10:07:20.907Z\",\"updated_at\":\"2024-08-30T10:07:20.907Z\"}"
      },
      "user": [],
      "room": [
        "admin"
      ]
    }

It looked like they had implemented some kind of chatroom-like functionality here, as the browser was sending messages that contained victim data to a “room” called admin.

We assumed that different application components could join “rooms” to receive messages. For example, if the scammer had a dashboard with an overview of all the victims, maybe that dashboard was also inside the “admin” room.
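To make that assumption concrete, here is a toy model of room-based message delivery. It is not Socket.IO's API, and the `RoomHub` class and client names are invented for illustration; it only shows why anything that joins a room receives whatever is sent to it.

```python
from collections import defaultdict


class RoomHub:
    """Toy publish/subscribe hub: messages go to every member of a room."""

    def __init__(self):
        self._members = defaultdict(set)   # room name -> set of client ids
        self.inboxes = defaultdict(list)   # client id -> received messages

    def join(self, client: str, room: str) -> None:
        self._members[room].add(client)

    def send(self, room: str, message: dict) -> None:
        # Delivery depends only on room membership, not on who the sender is.
        for client in self._members[room]:
            self.inboxes[client].append(message)


hub = RoomHub()
hub.join("dashboard", "admin")    # hypothetical operator dashboard
hub.join("victim-1", "default")   # a visitor's browser joins a default room

# The visitor's browser sends typed data to the "admin" room.
hub.send("admin", {"type": "sync-data", "data": {"field": "name"}})

print(hub.inboxes["dashboard"])   # the dashboard received the message
print(hub.inboxes["victim-1"])    # the visitor did not
```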

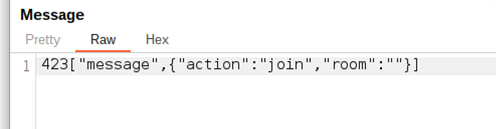

Digging a bit more into this “room” functionality, we eventually came across the message below. Whenever the phishing page was loaded, we would join a default room.

This message helped support our earlier assumption. Our browser, the “victim”, was joining an empty room, or a default room, but the data we typed in was being sent to an “admin” room.

Our next thought became our next move: What if we joined the admin room?

What we saw shocked us. Flying by our screen was a stream of names, addresses, and credit cards, a real-time feed of hundreds of victims being phished. Everything they typed in, character by character, we could see on our screen.

What had started as an interesting side project had turned into something serious.

Darcula Group

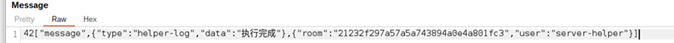

After the initial shock wore off, we realised that it wasn’t just victim data in the admin room. There were also some technical logs.

A message similar to the one above flew across the screen. The message was related to an error in a database component in which the name of the database appeared: Darcula.

At the time it didn’t mean much to us other than the fact that it was an interesting string, and kind of sounded like a username. Googling didn’t help much, as this was also the name of a popular colour scheme used in text editors, which overwhelmed the search results.

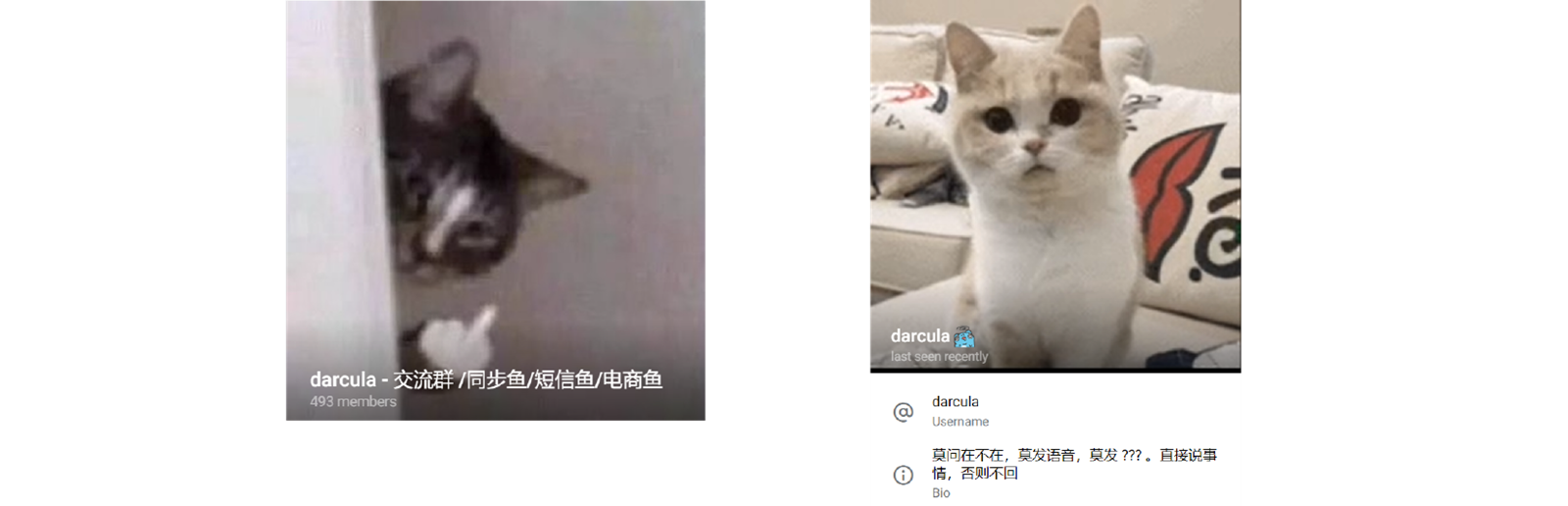

Around this time, the messaging service Telegram was being criticised for operating as a safe harbor for criminal networks. There we quickly found both a user and a Chinese-language group called Darcula. Darcula was the administrator of this group.

After scrolling through the photos posted in the group, it became apparent that we were looking in the right place.

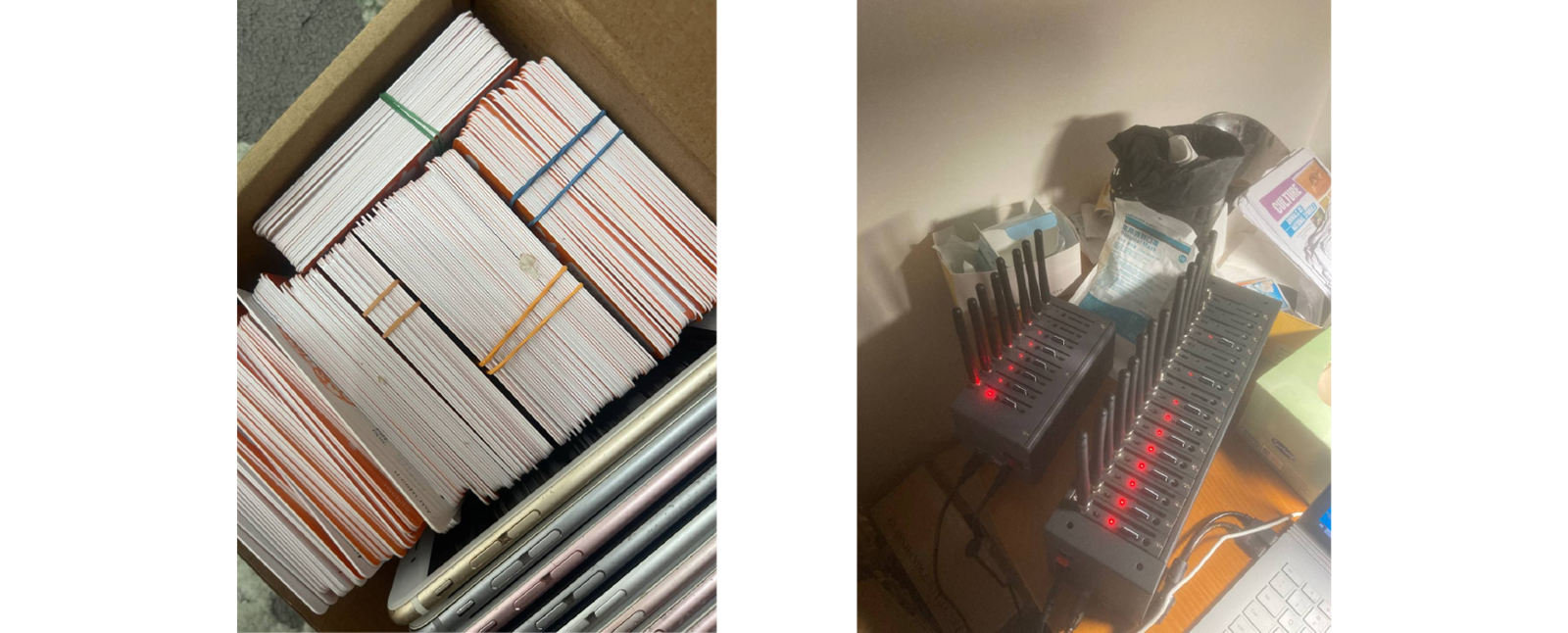

Here we saw users sharing photos of their operations: inventories of phones, SIM cards, modems, servers, and racks of devices used to send messages to victims.

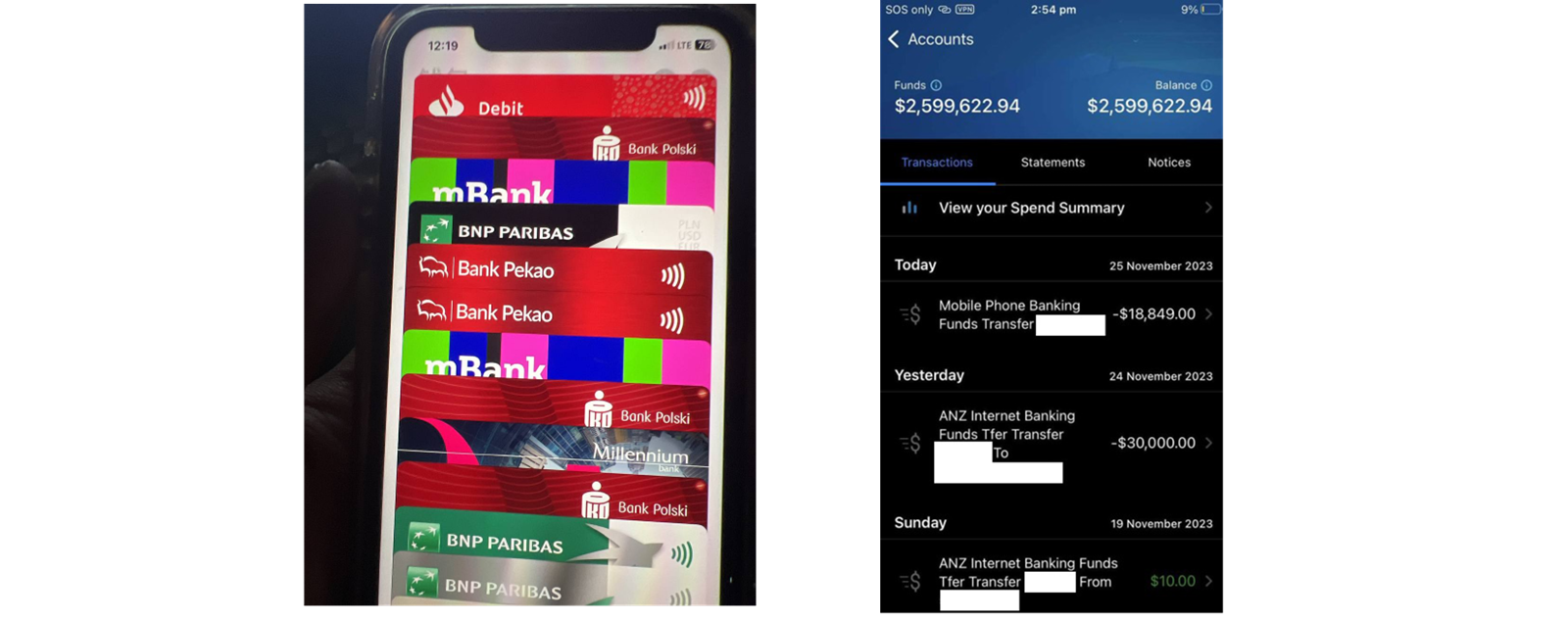

Operators also showed phones loaded with credit cards in Apple Pay, and payment terminals.

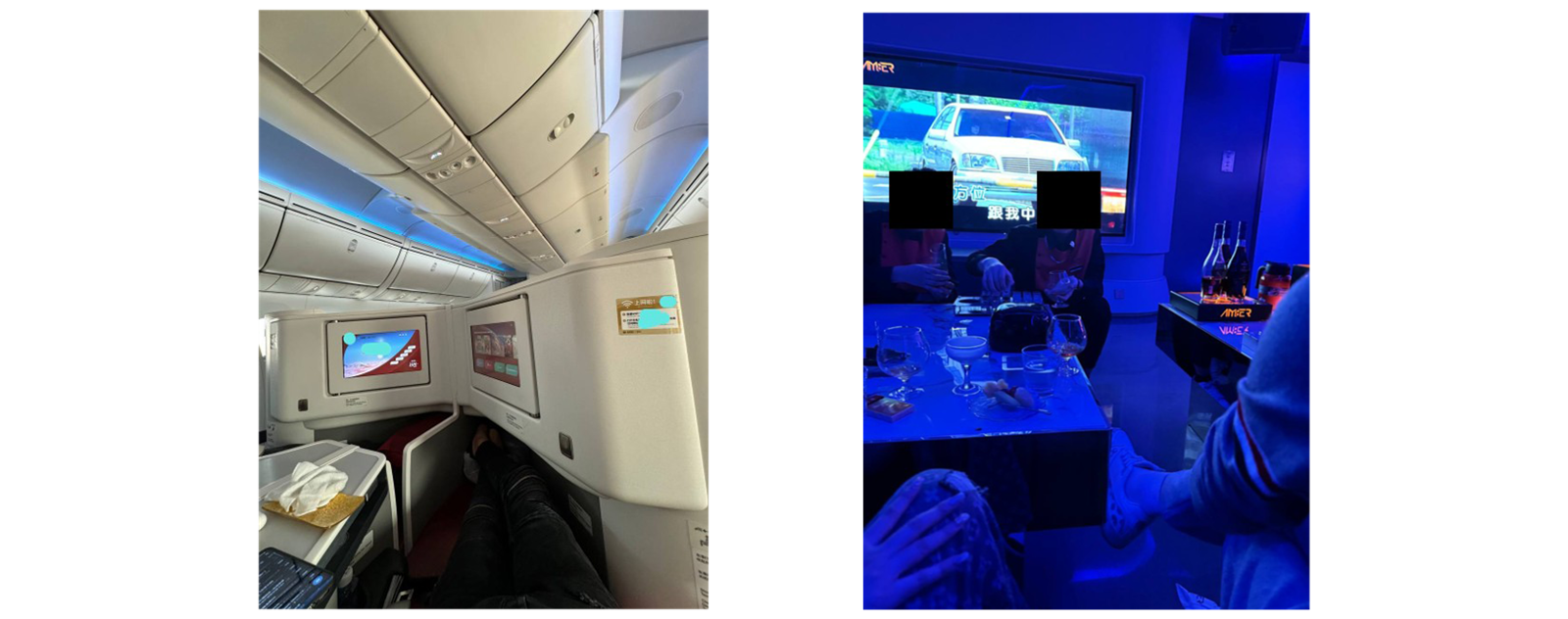

Users also shared images bragging about their success, showing bank accounts and the extravagant life their phishing scam enabled them to live.

Not only were the group members earning money, but they were spending it as well.

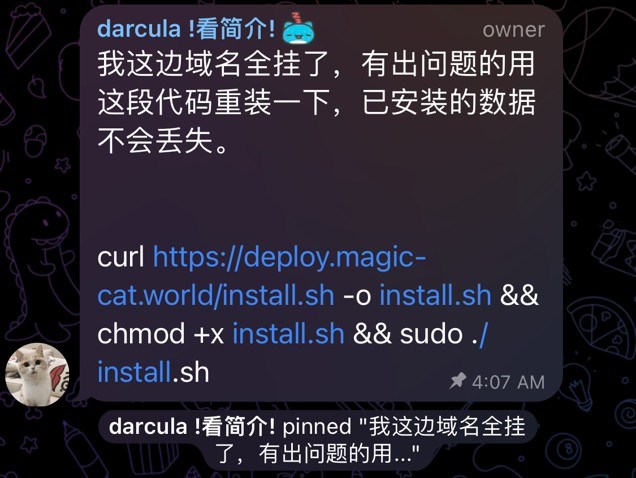

In the Telegram group we also found a pinned message with installation instructions for the phishing software itself.

We used a spare laptop, followed the instructions and minutes later, we had installed a copy of the same phishing software that had been used against us: Magic Cat.

Activating Magic Cat

It was very easy to get the software up and running. All we had to do was copy and paste that one simple command, and the phishing software was basically ready to go – minus the fact that our installation was unlicensed.

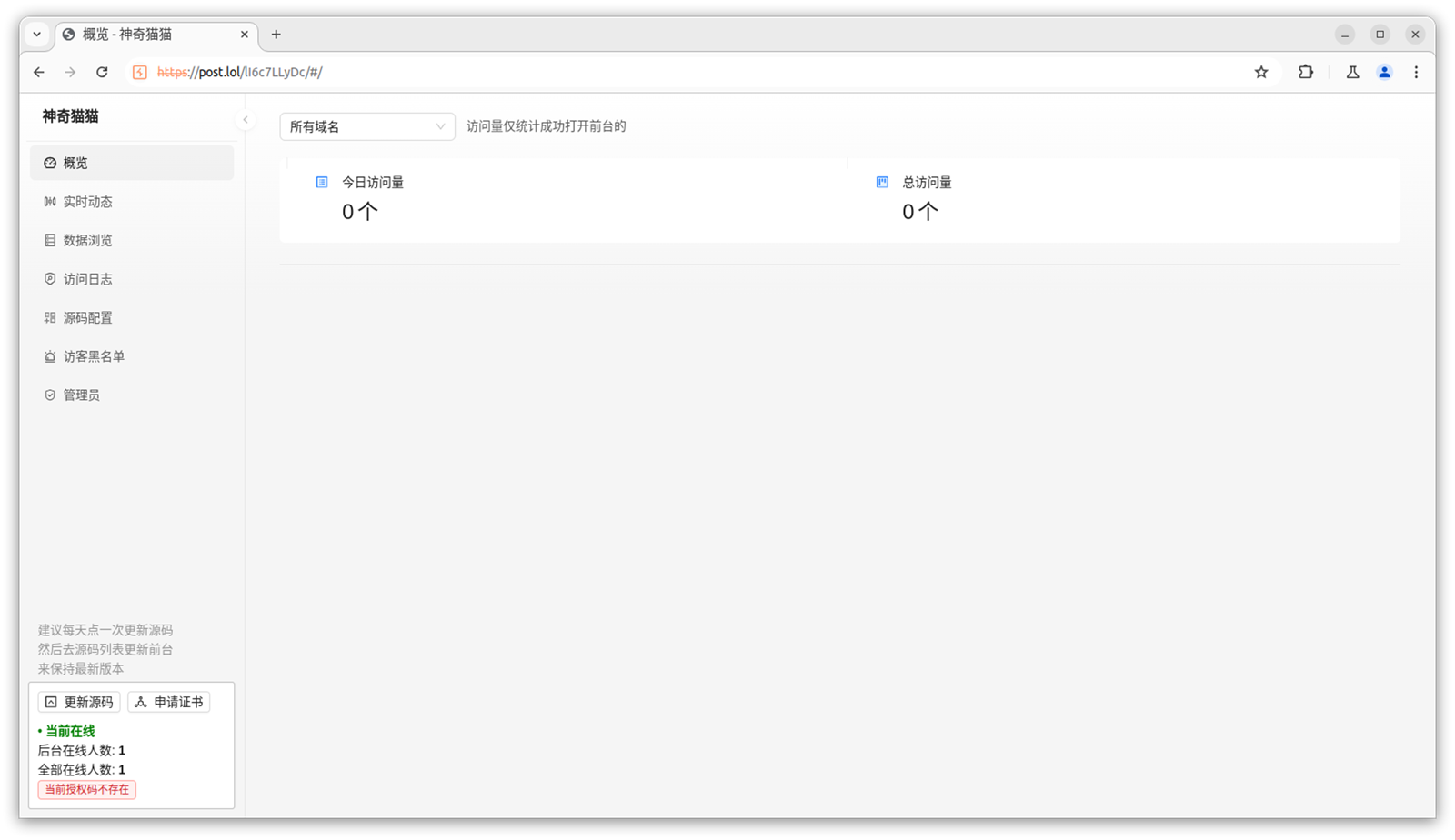

The screenshot below is the admin dashboard for the software we now know to be called Magic Cat. This was the hidden operator dashboard that the scammer would use to see all the stolen credit card data and interact with victims to request additional PIN-codes if required.

However, we still had one problem – our version was unlicensed, as indicated by the red message in the lower left of the dashboard. We would need to obtain a license key before we could test the software and create a phishing campaign.

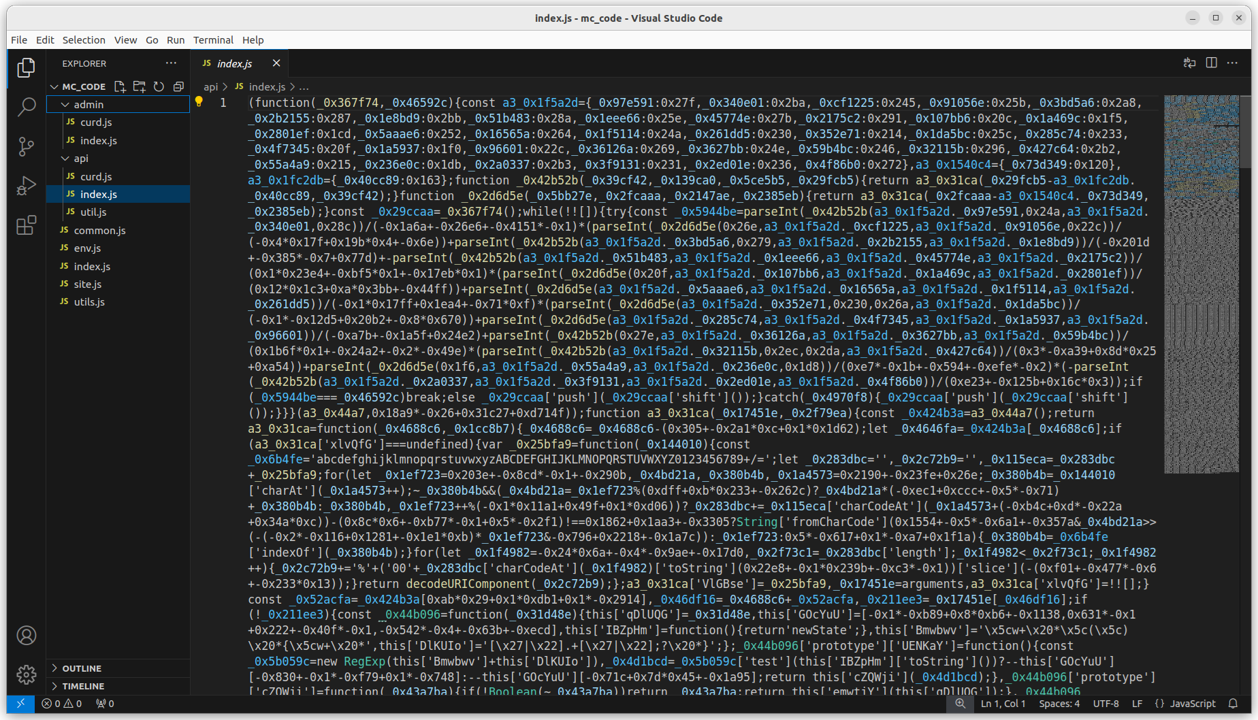

Curious as to what the software was capable of, we started poking around in the backend code. Unsurprisingly, this wasn’t exactly straightforward. Of course, the developer had obfuscated this as well.

This wasn’t the first time we had run into obfuscated Node.js. We were familiar with the open-source tool plainly named “JavaScript Obfuscator” that essentially takes normal code and spits out something that looks like the screenshot above: unintelligible muck. But there is another tool, also open-source, called “synchrony” that deobfuscates the output from JavaScript Obfuscator. What you get back is more or less readable code, minus the comments and original identifiers.

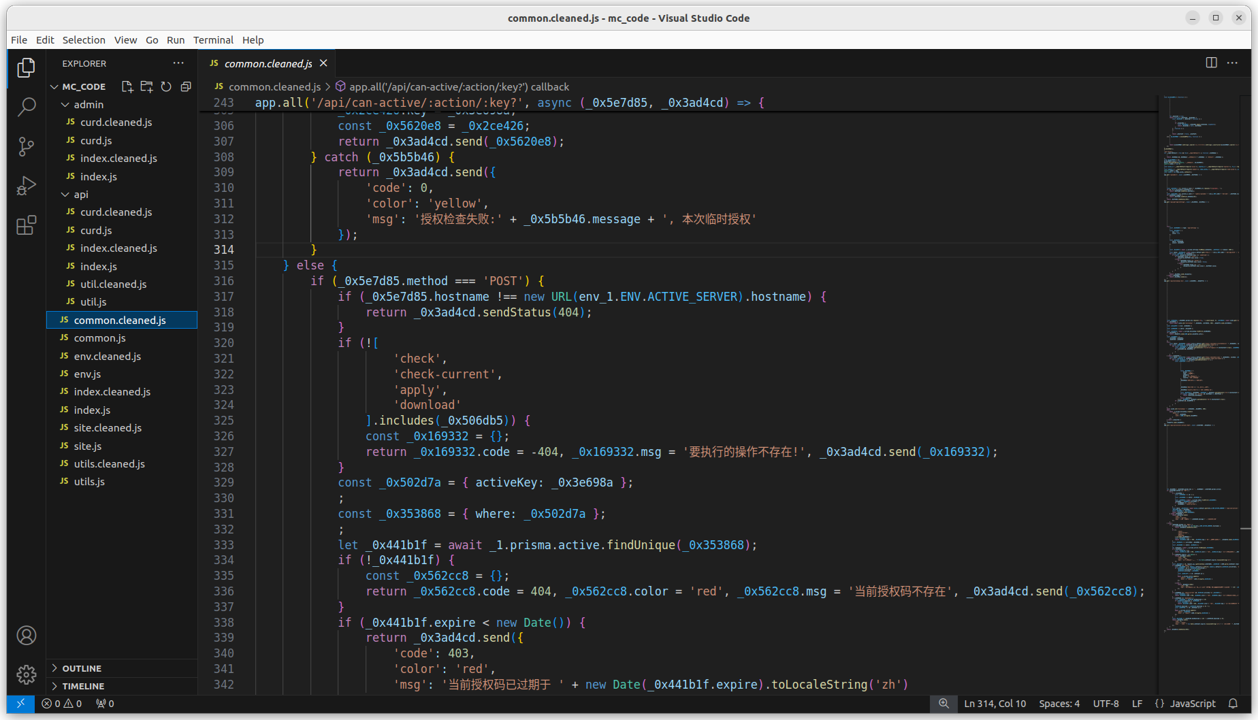

With readable backend code in hand, we were able to see how different aspects of the software worked. Somewhat surprisingly we saw functions related to the generation of activation keys. This wasn’t just code for consuming an activation key, it was actually a full-fledged activation server that could generate new activation keys, hiding inside every copy of the phishing software.

If the server was configured with a particular hostname, it would activate a hidden menu and enable functionality to generate activation keys. It was also capable of activating other copies of Magic Cat.

Activating this software required us to first generate a new license, add a comment to it, and specify the license’s expiration date and the limit of phishing templates each license is eligible to download. These features were similar to enterprise software licensing functions, allowing for license management and bookkeeping. Magic Cat also logged the IP addresses of all the phishing servers it received activation requests from.

Since we were running everything in a controlled lab environment, we were able to trick our copy of the software into thinking it was the real activation server, and activate our own copy of Magic Cat without purchasing a license.

The Magic Cat phishing platform

With the software running on our own laptop and with access to the source code, we were now able to investigate the phishing operators’ side of Magic Cat.

Poking around, we came across plenty of interesting findings within the software. It’s feature-rich, and clearly developed to enable non-technical buyers to conduct their own phishing campaigns at scale.

At the time, this included out-of-the-box support to impersonate a few hundred brands in countries around the world. Recent updates to the platform are reported to have made building custom brand templates even more user friendly for operators.

Magic Cat also streamed data entered by victims to the operators in real time, allowing them to see, character by character, the data that was entered into the phishing sites. It also allowed operators to request PIN codes in real time and to integrate easily with SMS gateways, among many other features.

A backdoor?

When looking at the code of our copy of the phishing software, we noticed logic checking if a set of parameters from the HTTP request matched. If they did, it would skip the authorisation checks and one could perform queries without having to be logged in as a phishing operator.

This feature struck us as either a backdoor or an oversight by the developer, and to this day we’re not entirely sure which. It may have been intended for some kind of poorly designed development environment. Or, if you want to put your black hat on and think a bit more suspiciously, it would make a very good backdoor with plausible deniability built in, in case anybody were to discover it in the future.

Moving forward

Our research started to reveal just how big and professional the operation was. The platform had regular software release cycles, new feature announcements, installation guides and support, and complete bookkeeping of those purchasing and using the software.

In January 2024 we compiled a report that detailed all our findings, and shared it with several law enforcement agencies.

Early in the project we made a conscious decision to focus on the big picture. Our initial research on smishing attacks showed that most of the work up until then had been technical in nature, focusing on the latest software the scammers used or their newest techniques for sending messages. We knew that the most likely outcome of repeating that type of work would be the group learning from their mistakes, and us driving them further underground.

To address and counteract the issue of smishing, phishing, and scams in general requires attention from different sections of society. Financial institutions, mobile network operators, big tech, law enforcement agencies, and the general public all have their part to play. For this reason, we decided to reach out to NRK, the Norwegian Broadcasting Corporation, to see if they would be interested in our discoveries.

We shared our findings up until that point, and continued our research. The biggest question remained unanswered: who was Darcula?

Down the rabbit hole

Darcula maintained a relatively low profile. While other users flaunted their extravagant lifestyles in public Telegram groups, Darcula mostly stuck to mundane technical updates about Magic Cat and provided few leads for us to follow. At this point we were not even certain if Darcula was a person or a group. We continued to look and sifted through all the data we had related to the software ecosystem.

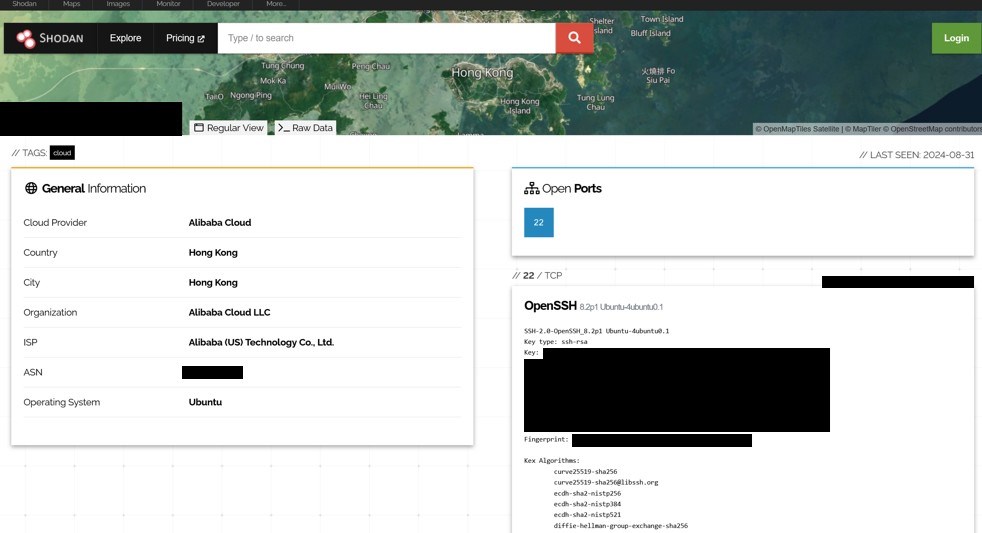

There was no shortage of dead ends. But eventually we came across an IP address that appeared to be connected to administrative functions. This might be interesting as it could very well belong to whoever was behind the Magic Cat software.

The IP was a virtual machine in Alibaba Cloud, most likely some kind of VPN. By itself, this didn’t mean much. It could be something that was custom built by Darcula, or it could be a shared VPN service used by hundreds or thousands of users. In any case, we kept following the lead.

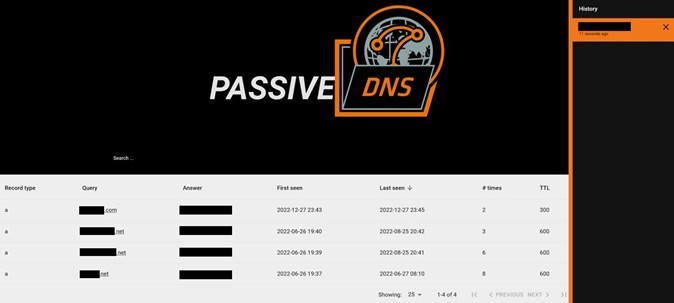

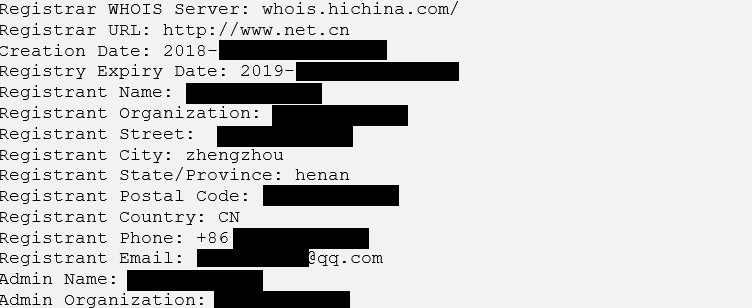

We wanted to see what this IP had been used for in the past. mnemonic maintains a Passive DNS service, which is essentially a historical record of what IP addresses were associated with what domain names. Using passive DNS records, we were able to see that a little over a year prior to our research, in December 2022, the same IP address was linked to a specific domain. Looking at historical DNS records, we could also see that this domain was linked to a GitHub username.
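Conceptually, a passive DNS lookup is a query over historical observations. The sketch below models that idea with invented records; the `Observation` type, the `domains_for_ip` helper, the domains and the addresses are all placeholders, and it does not represent mnemonic's actual service or data.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Observation:
    """One historical sighting of a domain resolving to an IP address."""
    domain: str
    ip: str
    first_seen: date
    last_seen: date


# Invented records using reserved documentation domains and address ranges.
RECORDS = [
    Observation("old-project.example", "203.0.113.10", date(2022, 12, 1), date(2022, 12, 20)),
    Observation("unrelated.example", "198.51.100.4", date(2023, 5, 2), date(2023, 6, 1)),
    Observation("later-tenant.example", "203.0.113.10", date(2023, 9, 3), date(2023, 9, 9)),
]


def domains_for_ip(records, ip, on=None):
    """Return observations for an IP, optionally only those active on a date."""
    hits = [r for r in records if r.ip == ip]
    if on is not None:
        hits = [r for r in hits if r.first_seen <= on <= r.last_seen]
    return sorted(hits, key=lambda r: r.first_seen)


for record in domains_for_ip(RECORDS, "203.0.113.10"):
    print(record.domain, record.first_seen, record.last_seen)
```

The two different domains on the same address in this toy data also illustrate why a shared IP is weak evidence on its own: cloud addresses are reassigned over time.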

At this point, we deemed the reuse of an IP address to be a weak link. IP addresses frequently change hands. If a virtual machine in Alibaba Cloud was deleted, the IP it used could easily be given to another customer. But we kept going. The fact that the user’s GitHub repositories contained software built using the same frameworks as Magic Cat gave us some indication that there might be a connection.

The commit history of these GitHub repositories revealed the user’s Gmail account name and gave us a basis for finding more information that could reject or reinforce the theory that this was Darcula.

Throughout our research we used a service called OSINT Industries. OSINT stands for open source intelligence and is the act of using public information from the internet to produce actionable intelligence. The service that we used is a paid service that automates the process of checking to see which accounts are associated with a given email address or phone number. It also shows you any available public information about that account.

Imagine resetting a password on a website that responds with “enter the phone number ending in XX so we can send you a code”. If you did this on a bunch of websites, you could figure out which websites that email address was used on, and potentially parts of a phone number as well. OSINT Industries automates this process at a large scale.

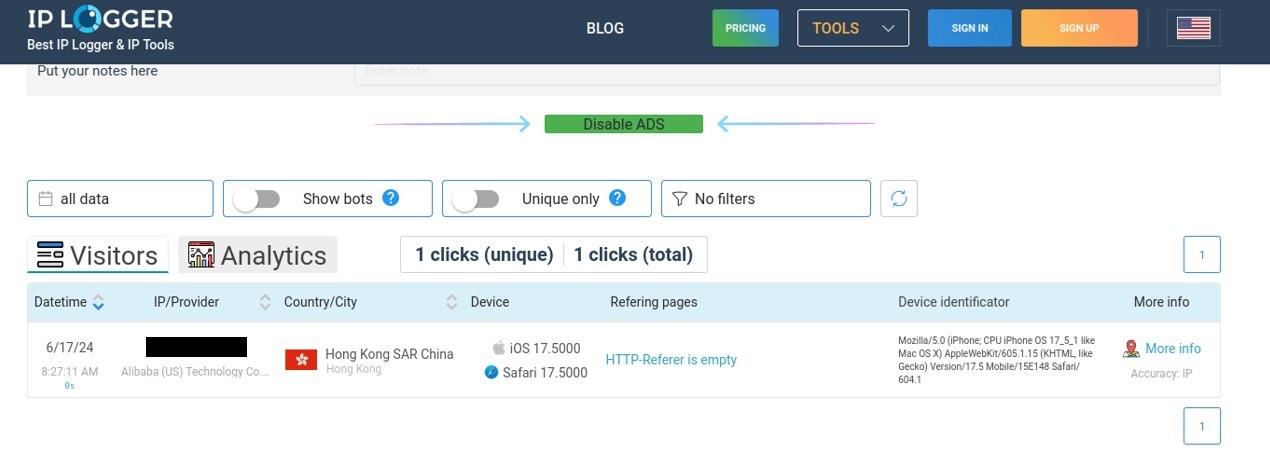

When we ran the Gmail account through OSINT Industries, we came across something interesting. Darcula’s Telegram profile had a US phone number (which we had previously assumed to be a burner number) that ended in 89. At the same time, the Gmail account was linked to an Apple account that also had a phone number ending in 89.

Was this a coincidence? The quickest way to disprove, or at best strengthen, the belief that Darcula and our GitHub user were the same person would be to see whether the GitHub user was still using the same server as a VPN. We decided to send an email containing a link to the associated Gmail address. If the link was clicked, we would record the visitor’s IP address.

And the link was clicked.

This confirmed not only that the email address was still active, but also that someone with access to the account had clicked the link, and that the recorded IP address matched the one we had seen in Magic Cat. The connections to Darcula continued to pile up.

Even further down the rabbit hole

The OSINT Industries search had also uncovered a partial Chinese phone number associated with the Gmail account.

Proof of identity is required when obtaining a new Chinese phone number. This meant that if we were able to find Darcula’s full Chinese phone number, there would be a strong link to Darcula’s real identity.

Searching through archived copies of the domain we uncovered earlier, we were able to find another email address. This one was a QQ account, a popular Chinese messaging and email service. Combined with information we obtained from OSINT Industries on the Gmail account, and some manual OSINT work on the QQ account, we were able to uncover all but three digits of a Chinese phone number.

Interestingly enough, there is no standardisation for which digits are masked when a website hides part of a phone number for account recovery purposes. If different websites hide different digits, you can overlay the results to narrow down the possibilities.

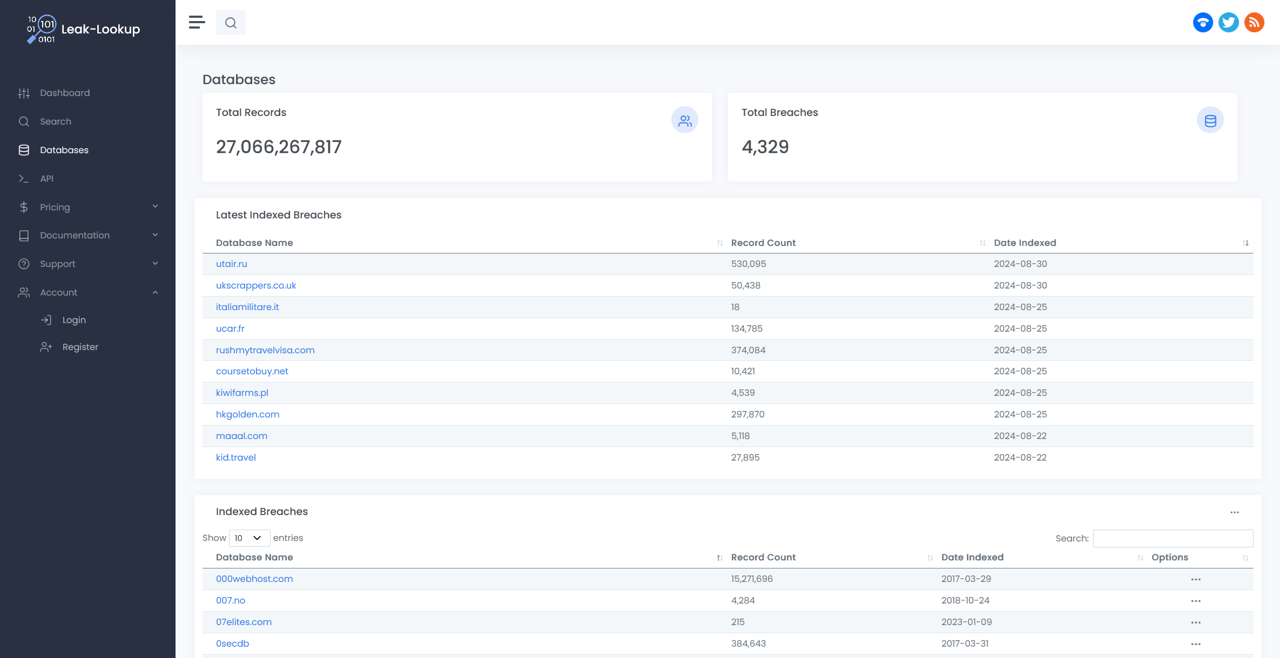

Three missing digits meant we were left with 1,000 possible phone numbers. We wanted to search OSINT Industries for the possible numbers, as it might be able to provide a reverse link to the QQ or Gmail account we already knew about. However, this would have been a bit expensive. What we opted to do instead was to search public leak/breach databases for all of the possible numbers, and then run the hits through OSINT Industries.
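The overlay idea and the resulting search space can be sketched in a few lines. The masked values below are fictional (they are not valid mobile numbers and do not come from the investigation), and the `overlay` and `candidates` helpers are our own illustration.

```python
from itertools import product


def overlay(masks):
    """Merge masked strings of equal length, keeping every revealed digit."""
    length = len(masks[0])
    if any(len(mask) != length for mask in masks):
        raise ValueError("masks must have the same length")
    merged = []
    for column in zip(*masks):
        known = {ch for ch in column if ch != "*"}
        if len(known) > 1:
            raise ValueError("masks disagree on a digit")
        merged.append(known.pop() if known else "*")
    return "".join(merged)


def candidates(mask):
    """Yield every number obtained by filling the remaining '*' positions."""
    gaps = mask.count("*")
    for digits in product("0123456789", repeat=gaps):
        fill = iter(digits)
        yield "".join(next(fill) if ch == "*" else ch for ch in mask)


# Two fictional hints, as two different websites might display them.
merged = overlay(["100****5678", "1001*****78"])
numbers = list(candidates(merged))

print(merged)        # combined view with three unknown digits
print(len(numbers))  # 10 ** 3 possibilities
```

Each additional revealed digit cuts the candidate list by a factor of ten, which is why combining hints from several sources matters so much.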

Our thinking was that not all phone numbers would have an online presence, and that we would be more likely to get a hit with numbers that had been found in public database leaks.

Our approach seemed to pay off when we found a phone number that linked back to an Instagram account sharing the same username as the Gmail account, and using an avatar that we had seen with the Gmail account.

Now that we had a likely phone number match, we decided to search historical WHOIS records (public website registration records) for websites that might have been registered with that number. Again, we got a hit. In this record there was a full name, phone number, and city.

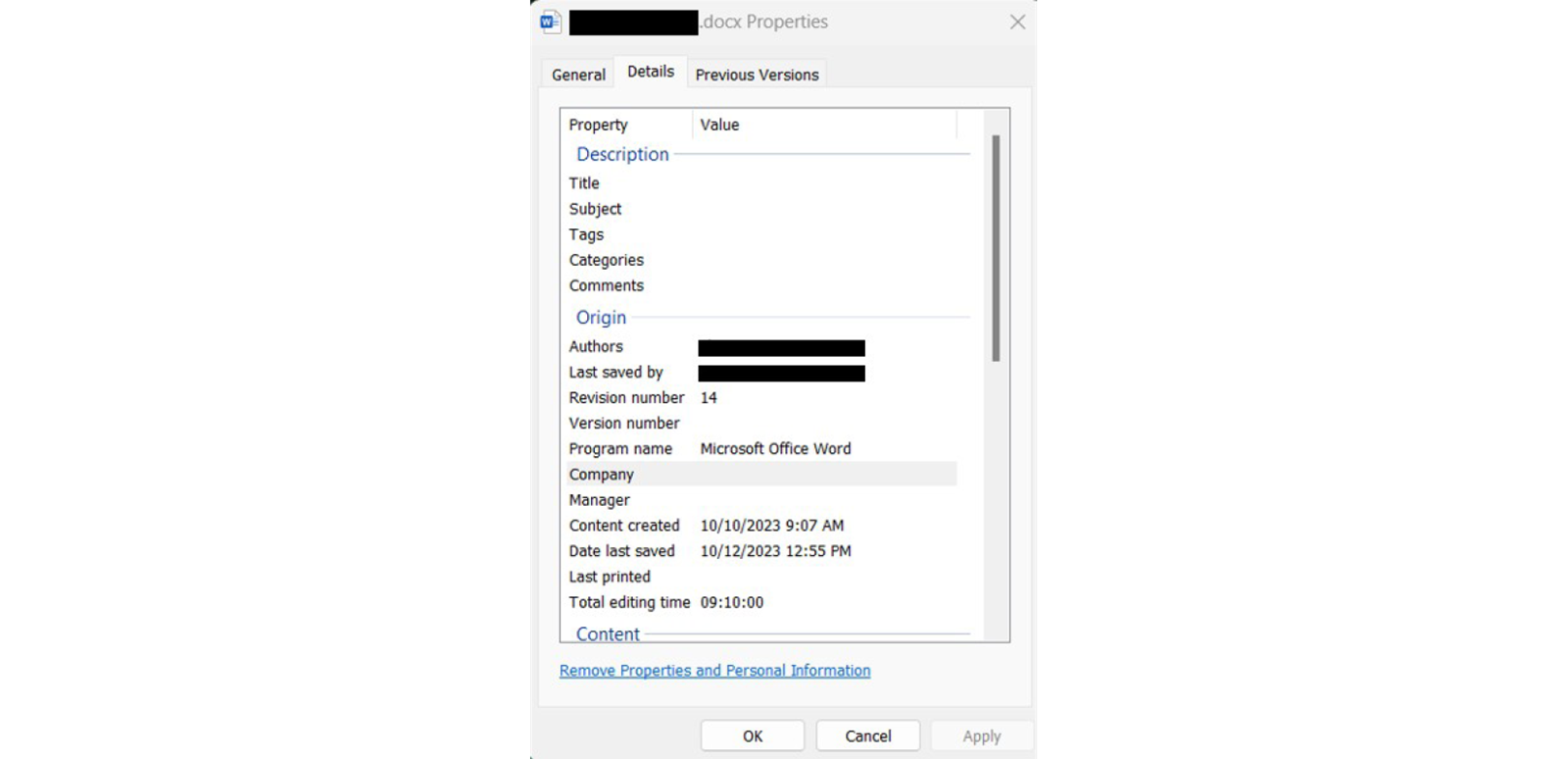

We had seen the name before, on Telegram. Darcula had uploaded multiple Word documents with instructions on how to install and use Magic Cat. In the document metadata, we saw the same name listed as the document author.

The story continues

When we started looking into this phishing kit, our initial motivation was sparked by the obvious lack of information on the ongoing phishing campaigns.

We wanted to find out more about who was behind this scamming operation and to understand how it worked. The result we hoped for, at best, was to learn enough about the phishing campaigns to publish a post informing our audience of how they work.

Our research uncovered hundreds of thousands of victims, thousands of licences bought to use Magic Cat, and a mature ecosystem enabling the misuse of well-known brands from all over the world.

At this point we felt that our part in this story was nearing an end. The journalists at the Norwegian broadcaster NRK were able to take our findings and, through their investigative work, uncover new aspects of what this world looks like, opening the door for discussions on what we can do as a society to stop this type of crime.

Read more about NRK's investigation here:

Want to get in touch with mnemonic about these findings? Please reach out to [email protected] or our researchers below.