Detecting advanced threats with Purple Team testing

Purple Team testing helps assess and improve detection and response to real threats, but how do you stop attackers who dodge all known detection methods?


TL;DR

Why care: Current Purple Team testing approaches do not address advanced, in-memory tradecraft.

What’s new: mnemonic’s Advanced Cyber Operations campaign emulates threat intelligence-driven, stealthy attack techniques.

Key takeaway: Detect artifacts, test high-impact TTPs, and harden endpoints to block C2 agents.

Target audience: Detection engineers, Purple and Red Team operators, security practitioners.

Purple Team testing is a security testing concept that enables organisations to test and improve their ability to detect and respond to real-world cyber threats. This approach provides a baseline understanding of detection coverage against known threats, but how do you defend when attackers deliberately bypass both standard and custom detection?

Note: This blog post assumes some familiarity with our Purple Team concept, which is described in our previous two-part blog series:

The challenge of simulating advanced threats in Purple Team tests

Since 2022, mnemonic has developed numerous Purple Team "campaigns." These campaigns are executed as security tests that group a collection of attack techniques used by threat actors within a certain level of sophistication. Here we will dive into how we evolved these tests to simulate advanced threat actor techniques, culminating in our Advanced Cyber Operations campaign framework.

To be effective, advanced Purple Team campaigns must move beyond simple, atomic tests or improvised attacks using standard Command-and-Control (C2) frameworks. They need to be:

- Threat intelligence-driven: Reflecting the tactics, techniques, and procedures (TTPs) and sophistication of relevant, real-world threat actors.

- Tactically sophisticated: Simulating advanced execution of complex procedures, not just isolated commands.

mnemonic’s Red Team is well versed in simulating advanced threat actors in Red Team and TIBER tests. In this section, however, we discuss the challenges of simulating advanced threat actors in the context of Purple Team testing.

The missing “Advanced” level

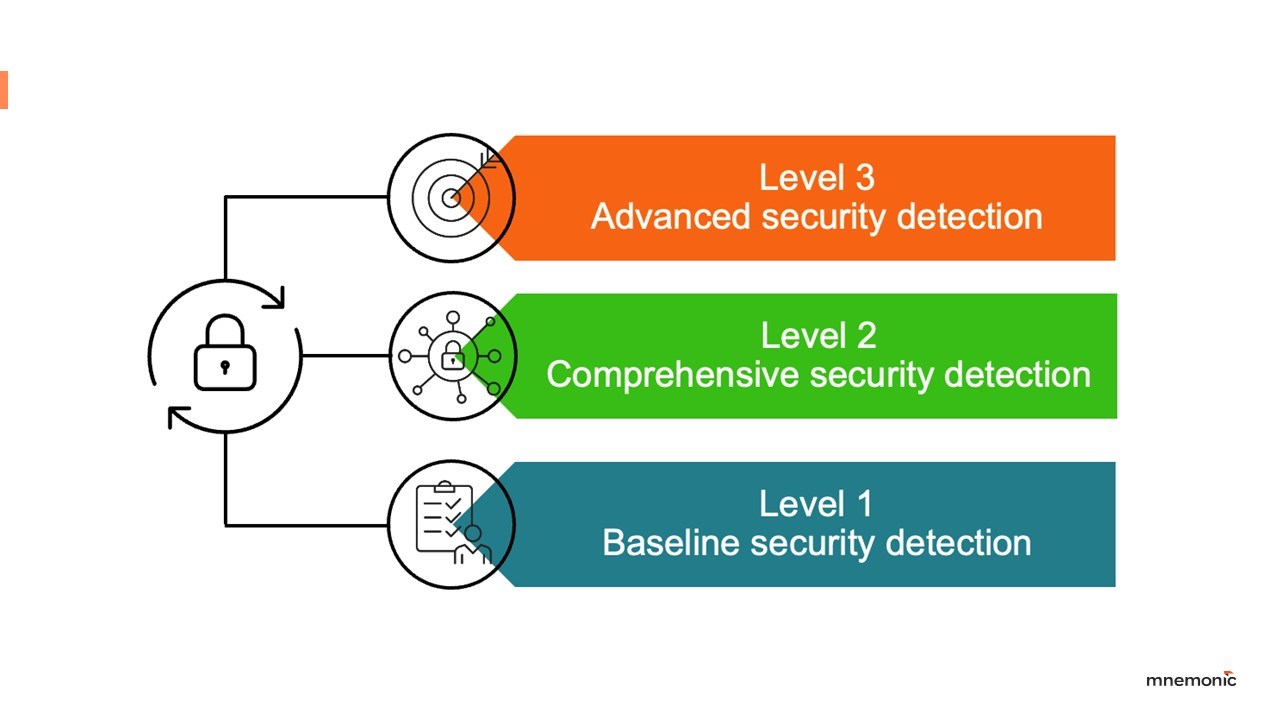

Since we established our three-tier Purple Team model in 2022 (see figure above), many clients have performed both Baseline and Comprehensive Purple Team campaigns with us. Baseline tests validate that an organisation has an essential level of threat detection, while Comprehensive tests challenge the default detection an organisation gets from defensive tools such as Endpoint Detection and Response (EDR).

A few clients have inquired about the promised Advanced tier, prompting us to revisit what 'advanced' should truly include. Initially, this tier was even referred to as 'Custom,' reflecting the uncertainty around its scope beyond some preliminary concepts.

Purple Team testing at the Baseline and Comprehensive levels evaluates a mixture of vendor-supplied detection rules on endpoints and network sensors, detection from identity platforms, and any custom detections security engineers have built. Against commodity malware and well-known TTPs, these campaigns usually lead to significant improvements in detection coverage. However, incident reports show that advanced threat actors such as Nation-State Groups tend to avoid commodity malware and instead develop custom tooling and tradecraft for their operations. When these groups deliberately use techniques that subvert known detection mechanisms, defenders must raise the sophistication of their detection to match.

Defining "Advanced" Purple Team testing

When developing our Advanced level for Purple Team testing, we examined both our own and common industry approaches:

- Atomic tests: Executing single, isolated ATT&CK procedures (e.g., a specific registry key change or running mimikatz.exe). While useful for baseline checks, they don't reflect the adaptive nature of real intrusions.

- Scripted playbooks: Automating parts of known threat actor playbooks (like MITRE's Adversary Emulation Plans). These can be valuable but are often rigid, and may not accurately reflect an actor's behaviour in a different environment. They also require significant effort to adapt to each threat actor group.

- Improvised C2 attacks: Using standard C2 frameworks (like Cobalt Strike or Metasploit) and manually executing commands. This approach can be unstructured and difficult to repeat consistently, depends heavily on the individual tester’s skills and the target environment, and is less focused on specific, measurable detection gaps.

All three approaches have significant drawbacks that conflict with the core principles we established for our advanced campaigns. In the sections below, we explain why each of these options is less than ideal.

Option A: Atomic tests AKA “one-liners”

Implementing Purple Team campaigns as atomic tests ("one-liners") is a straightforward approach. In fact, nearly all our Baseline and Comprehensive Purple Team campaigns are implemented as atomic tests, because at those levels atomic tests adequately match the sophistication of the threat actors being simulated.

Initially, we explored creating an "Advanced" tier simply by increasing the technical complexity of these one-liners. However, this proved insufficient. We recognised that isolated commands, no matter how technically sophisticated, cannot realistically emulate the multi-stage, adaptive operational flow of a group such as Russia's APT29.

Credential dumping from LSASS (T1003.001) illustrates this discrepancy well. Detecting mimikatz.exe is a start, but it only verifies detection of one specific implementation of credential dumping, and a positive result can create a false sense of security. Sophisticated attackers rarely use off-the-shelf tools in their most basic form.

Consider the following implementations of the same technique, T1003.001, along with our rough estimate of detection difficulty based on more than 50 Purple Team campaigns executed in different organisations:

| Campaign Level | Example Procedure | Detection Difficulty | Notes |

| Baseline | mimikatz.exe executed from disk | Easy | Nearly always detected with basic AV/EDR signatures and command-line logging. |

| Comprehensive | Open-source, custom, not-signatured LSASS dumping tool executed from disk (e.g. Rustivedump) | Medium | Bypasses simple signatures; may require behaviour-based rules |

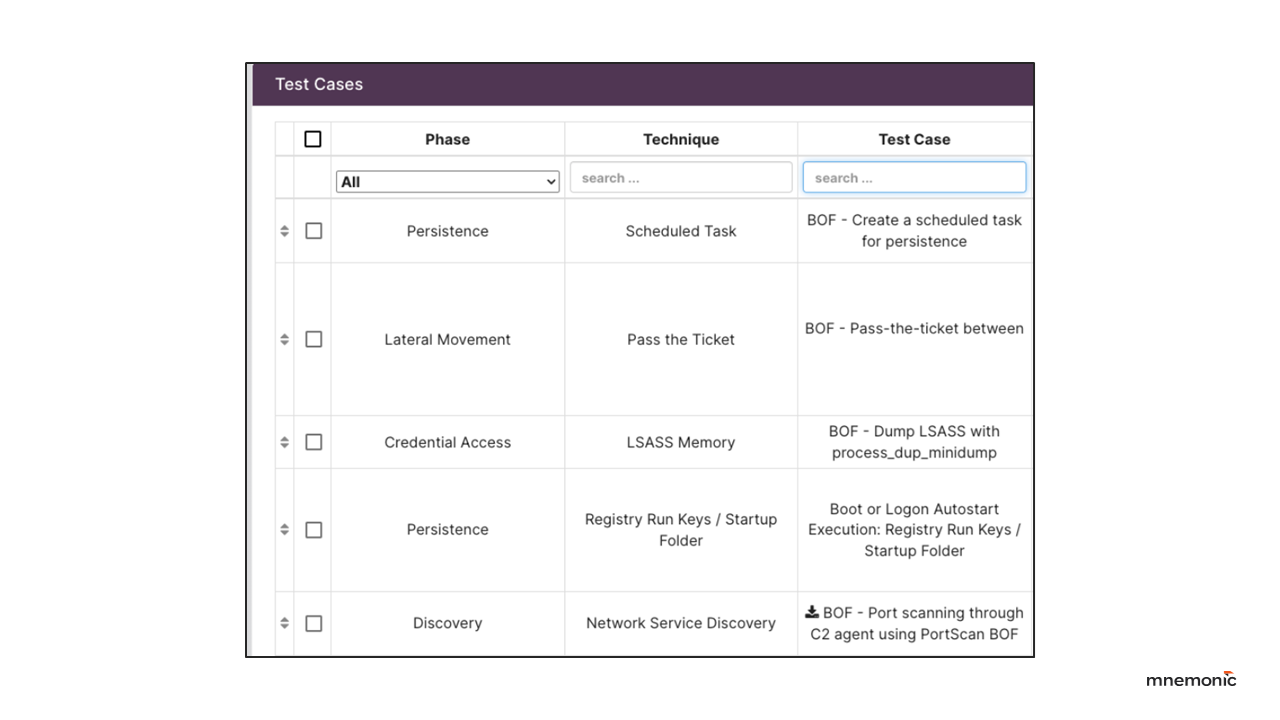

| Advanced | Custom credential dumper loaded via Beacon Object Files (BOF) | Hard | Memory-only execution, minimal artifacts, avoids common EDR hooks. |

As this example shows, simply checking off a TTP provides an incomplete picture. Real adversaries adapt, using numerous procedures for each technique, so relying solely on detecting known procedures leaves a gap against advanced threat actors who develop custom procedures for the same technique. While the technique is the same at every level, the sophistication of how the procedure is implemented and executed differs widely between the levels. This is why detection engineering increasingly focuses on identifying underlying behaviours rather than specific command-line strings or file hashes.
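To make the difference between procedure-level and behaviour-level detection concrete, here is a minimal Python sketch for T1003.001. It assumes Sysmon Event ID 10 (ProcessAccess) telemetry, whose `SourceImage`, `TargetImage` and `GrantedAccess` fields are modelled by a hypothetical `ProcessAccessEvent` class; the rule names and the allowlist are our own illustrations and would need tuning in any real environment.

```python
from dataclasses import dataclass

# Process access right from the Windows SDK (winnt.h).
PROCESS_VM_READ = 0x0010


@dataclass
class ProcessAccessEvent:
    """Hypothetical model of a Sysmon Event ID 10 (ProcessAccess) record."""
    source_image: str    # SourceImage: process that opened the handle
    target_image: str    # TargetImage: process being accessed
    granted_access: int  # GrantedAccess: Sysmon logs this as hex, e.g. "0x1010"


# Illustrative allowlist of legitimate LSASS readers; tune per environment.
ALLOWED_SOURCES = {
    r"c:\windows\system32\svchost.exe",
    r"c:\windows\system32\wbem\wmiprvse.exe",
}


def signature_rule(command_line: str) -> bool:
    """Procedure-level rule: matches one known tool by name."""
    return "mimikatz" in command_line.lower()


def behaviour_rule(event: ProcessAccessEvent) -> bool:
    """Technique-level rule: a non-allowlisted process reading LSASS memory."""
    targets_lsass = event.target_image.lower().endswith(r"\lsass.exe")
    reads_memory = bool(event.granted_access & PROCESS_VM_READ)
    allowed = event.source_image.lower() in ALLOWED_SOURCES
    return targets_lsass and reads_memory and not allowed


# A renamed, custom dumper evades the signature but not the behaviour rule.
custom_dumper = ProcessAccessEvent(
    source_image=r"C:\Users\Public\updater.exe",
    target_image=r"C:\Windows\System32\lsass.exe",
    granted_access=0x1010,
)
print(signature_rule(r"C:\Users\Public\updater.exe"))  # False
print(behaviour_rule(custom_dumper))                   # True
```

Because the behaviour rule keys on the access rather than the tool, it would also fire if a BOF opened LSASS from inside a C2 agent's host process, as long as that process is not allowlisted. It remains a sketch: an attacker operating from within a process the rule trusts would slip past it, which is part of why the Advanced row is rated Hard.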

Option B: Running pre-defined threat actor playbooks

MITRE publishes Adversary Emulation Plans for emulating parts of attacks by specific threat actors. We found these too constraining for our concept: they required extensive infrastructure preparation and manual testing, and depended on specific software being present in the target environment. While they offered a high level of sophistication and wow factor, they lacked practicality, driving up delivery costs and ultimately limiting their accessibility to a broader set of customers.

We also found that they only allowed us to simulate one specific threat actor at a time. Simulating a specific group is valuable if threat intelligence supports a suspicion that this group is targeting the organisation, but for many of our customers that is often not the case. It is frequently unclear which single actor to practise defending against without further investment in threat intelligence to support that choice.

Ultimately, we want all of our Purple Team campaigns, even the advanced ones, to be relevant for more than one organisation. We also want them to be repeatable and not bound to exactly what technology configuration or security posture the organisation has.

Option C: Spin up a C2 and improvise an attack

We also experimented with using standard C2 frameworks to improvise attacks during Purple Team tests. This approach proved difficult to manage, often leading to unstructured improvisation where testers could easily stray into “rabbit holes” instead of focusing on testing the intended techniques of the campaign. Our goal is the accurate, repeatable simulation of specific, planned attack techniques, not an ad-hoc simulation of common penetration testing methods. This lack of structure and reproducibility makes improvised attacks unsuitable for achieving the defined goals of our Purple Team campaigns at any level.

Introducing Advanced Cyber Operations

To address the above challenges, we designed and implemented our first advanced Purple Team campaign, the Advanced Cyber Operations (ACO) framework, developed in collaboration with our Red Team and Threat Intelligence (TI) groups. The Advanced Cyber Operations framework is built on two core principles:

- Threat intelligence-driven: These campaigns simulate techniques confirmed to be used by specific threat actor groups targeting relevant regions or sectors. This ensures the tested TTPs are relevant and realistic.

- Stealthy execution: Techniques are launched using methods that mimic advanced adversaries, primarily leveraging Beacon Object Files (BOFs) executed via C2 channels to minimise detection footprint.

Below, we highlight how we implemented the campaign according to these principles and what trade-offs we had to make.

Threat intelligence for ACO

The primary sources of threat intelligence for this campaign are public threat reports from security vendors and government cybersecurity agencies, alongside private intelligence feeds, which together help us identify the threat actors most likely to target Nordic organisations.

With this, we designed a campaign that simulates techniques used in recent years by various threat actor groups in three categories: Nation-State Group, Organised Crime Group, and Insider. According to our threat intelligence, actors in all of these categories are likely to target Nordic organisations in 2025.

Stealthy execution for ACO

Arguably, the stealthiest option for Red Team post-exploitation tooling today is Beacon Object Files (BOFs). In short, BOFs allow an operator (Red Team or threat actor) to execute arbitrary code in the memory of a Command-and-Control (C2) agent with a minimal footprint. It is not clear whether threat actors use BOFs in the same way Red Teams and penetration testers do, but we know from incidents we have handled that threat actors possess the same or similar memory-only, zero-artifact capabilities.

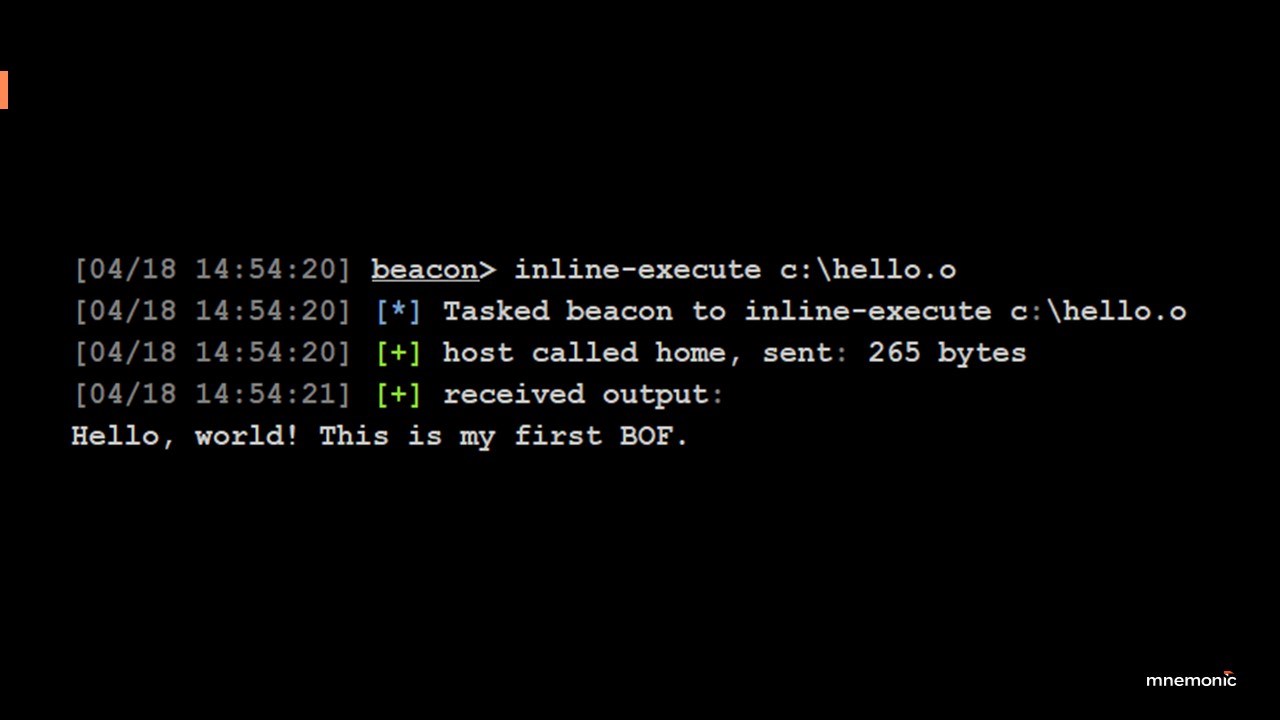

A primer on Beacon Object Files (BOF)

To understand Advanced Cyber Operations, a rudimentary understanding of the concept of BOFs is required.

Most threat actors use Command-and-Control (C2) frameworks to remotely control computer systems in a target environment. A BOF is a compiled C program that executes within a C2 agent’s process. In practice, this means the threat actor can run arbitrary C programs inside the C2 agent, in the memory of the target device.

BOFs essentially provide a stealthy mechanism for executing arbitrary attacker programs that support operational goals, which can include any known attacker technique in the MITRE ATT&CK Matrix. Compared with a PowerShell script, an EXE file on disk, or even .NET code run in memory, BOFs offer an even higher level of stealth. Because BOFs run only in memory, their execution produces no command-line logs, all operations happen directly through native Windows API calls, and they rarely leave artifacts on the target system. In essence, BOFs are the stealthiest mechanism Red Teams currently have for executing simulated attacker techniques in a way that resembles how the most advanced threat actor groups operate.

We will release a more detailed blog post on BOFs in the near future, explaining their scope and inner workings. For this post, however, you only need to know that BOFs are:

- Single-task implementations of an attack technique

- Executed in the same memory space as a C2 agent

- Minimal in footprint, often leaving no artifacts

ACO implementation

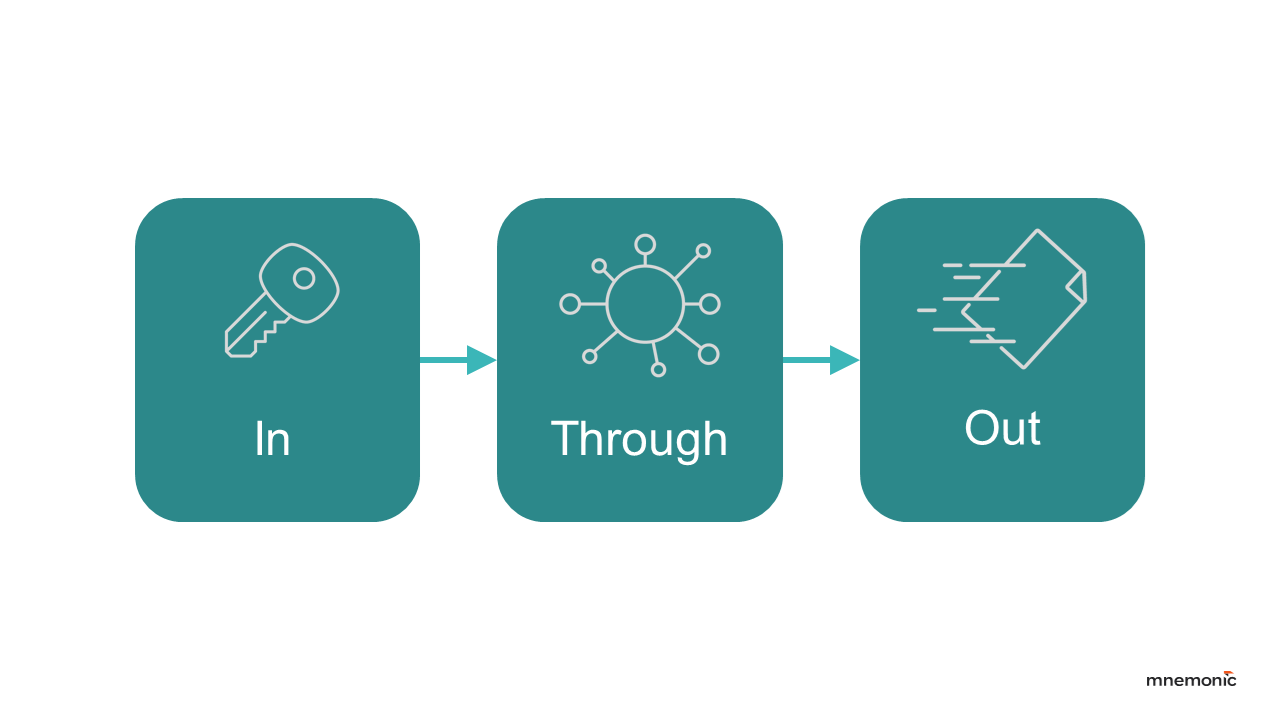

With these core principles established, we implemented the campaign. The ACO campaign has three phases that follow a simplified cyber kill chain:

- IN (Initial Access & C2): Testing defences against various payload delivery mechanisms (e.g., XLL, DLL, VBS) and C2 channel establishment over different protocols (HTTPS, DNS).

- THROUGH (Post-Exploitation): Executing BOFs via the established C2 channel to simulate persistence, credential access, privilege escalation, discovery, lateral movement, etc.

- OUT (Actions on Objectives): Simulating data collection, staging, exfiltration, and demonstrating potential impact.

This structured approach ensures comprehensive testing across different attack phases using advanced, stealthy methods. The techniques included are also purposefully selected to create entries from multiple log sources, including endpoint, network and identity. To simulate the behaviour of the chosen threat actors realistically, we execute the BOFs through a C2 agent when possible.
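One way to keep the IN/THROUGH/OUT structure and the multi-log-source goal checkable is to model a campaign plan as data. The Python sketch below is hypothetical: the `TestCase` fields, the excerpted test names and their ATT&CK IDs are illustrative choices of ours, not the actual ACO test list.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    IN = "Initial Access & C2"
    THROUGH = "Post-Exploitation"
    OUT = "Actions on Objectives"


class LogSource(Enum):
    ENDPOINT = "endpoint"
    NETWORK = "network"
    IDENTITY = "identity"


@dataclass
class TestCase:
    name: str
    phase: Phase
    attack_id: str  # MITRE ATT&CK technique ID
    expected_sources: set[LogSource] = field(default_factory=set)


def coverage_gaps(plan: list[TestCase]) -> dict[str, set[str]]:
    """Phases with no tests, and log sources no test is expected to touch."""
    phases_missing = {p.name for p in Phase} - {t.phase.name for t in plan}
    touched = set().union(*(t.expected_sources for t in plan))
    sources_missing = {s.value for s in LogSource} - {s.value for s in touched}
    return {"phases": phases_missing, "log_sources": sources_missing}


# Illustrative excerpt of a plan; not the actual ACO test list.
plan = [
    TestCase("XLL payload execution", Phase.IN, "T1204.002", {LogSource.ENDPOINT}),
    TestCase("C2 over DNS", Phase.IN, "T1071.004", {LogSource.NETWORK}),
    TestCase("LSASS dump via BOF", Phase.THROUGH, "T1003.001", {LogSource.ENDPOINT}),
    TestCase("Kerberoasting via BOF", Phase.THROUGH, "T1558.003", {LogSource.IDENTITY}),
    TestCase("Exfiltration over C2", Phase.OUT, "T1041", {LogSource.NETWORK}),
]
print(coverage_gaps(plan))  # {'phases': set(), 'log_sources': set()}
```

Dropping the identity-focused test from this excerpt would make `coverage_gaps` report `identity` as an untouched log source, flagging the plan before it is executed.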

Detecting advanced threats

A key challenge with ACO was enabling the Blue Team to detect these techniques, or at least to identify the relevant events in log sources and build detections for them. Implementing advanced, stealthy techniques is easy compared to providing clear detection guidance, especially when detection is inherently difficult.

From a Red Team perspective, the appeal of tools like Beacon Object Files (BOFs) is clear: they offer stealth and operational flexibility. The availability of public BOFs on GitHub (like those from …) illustrates their popularity in the offensive community and strongly suggests that threat actors possess similar capabilities. Our initial exploration into detecting attack techniques implemented as BOFs revealed significant limitations in standard EDR telemetry and existing detection logic: many procedures generated none of the telemetry artifacts needed for reliable detection.

Recognising this gap, we looked for external research on detection strategies for BOF-implemented attack techniques and their execution. This led us to a detailed blog post from Elastic Security Labs, "Detonating Beacons to Illuminate Detection Gaps" (Feb 2025), one of the few public analyses focused specifically on detecting BOF-based execution of attack techniques. Following discussions with the Elastic team, they kindly shared code they wrote for BOF parsing and analysis, which enabled us to test and develop potential detection methods for many publicly available BOFs.
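The Elastic code is not reproduced here, but a typical first, static step in analysing a BOF is listing which Windows APIs it resolves. The Python sketch below is our own illustration, not Elastic's implementation: it assumes an x64 or x86 COFF object file and the Dynamic Function Resolution naming convention used by Cobalt Strike BOFs (`__imp_LIBRARY$Function`); `external_symbols` and `dfr_imports` are hypothetical names.

```python
import struct

COFF_HEADER = struct.Struct("<HHIIIHH")  # IMAGE_FILE_HEADER (20 bytes)
SYMBOL = struct.Struct("<8sIhHBB")       # IMAGE_SYMBOL (18 bytes)
IMAGE_SYM_CLASS_EXTERNAL = 2
SUPPORTED_MACHINES = {0x8664, 0x14C}     # AMD64, I386


def external_symbols(data: bytes) -> list[str]:
    """Names of external symbols in a COFF object file."""
    machine, _, _, sym_ptr, n_syms, _, _ = COFF_HEADER.unpack_from(data, 0)
    if machine not in SUPPORTED_MACHINES:
        raise ValueError(f"unsupported machine type 0x{machine:x}")
    strtab = sym_ptr + n_syms * SYMBOL.size  # string table follows the symbols
    names, i = [], 0
    while i < n_syms:
        raw, _, _, _, storage, n_aux = SYMBOL.unpack_from(data, sym_ptr + i * SYMBOL.size)
        if storage == IMAGE_SYM_CLASS_EXTERNAL:
            if raw[:4] == b"\0\0\0\0":
                # Long name: bytes 4-8 are an offset into the string table.
                (offset,) = struct.unpack_from("<I", raw, 4)
                start = strtab + offset
                names.append(data[start:data.index(b"\0", start)].decode("ascii"))
            else:
                names.append(raw.rstrip(b"\0").decode("ascii"))
        i += 1 + n_aux  # auxiliary records count towards n_syms
    return names


def dfr_imports(data: bytes) -> list[tuple[str, str]]:
    """(DLL, function) pairs from `__imp_LIBRARY$Function` symbols."""
    pairs = []
    for name in external_symbols(data):
        if not name.startswith("__imp_"):
            continue
        name = name[len("__imp_"):].lstrip("_")  # x86 adds a leading underscore
        if "$" in name:
            lib, func = name.split("$", 1)
            pairs.append((lib, func.split("@")[0]))  # drop x86 stdcall suffix
    return pairs
```

An import list only hints at what a BOF might do; confirming the telemetry it actually produces still requires running it in a test environment.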

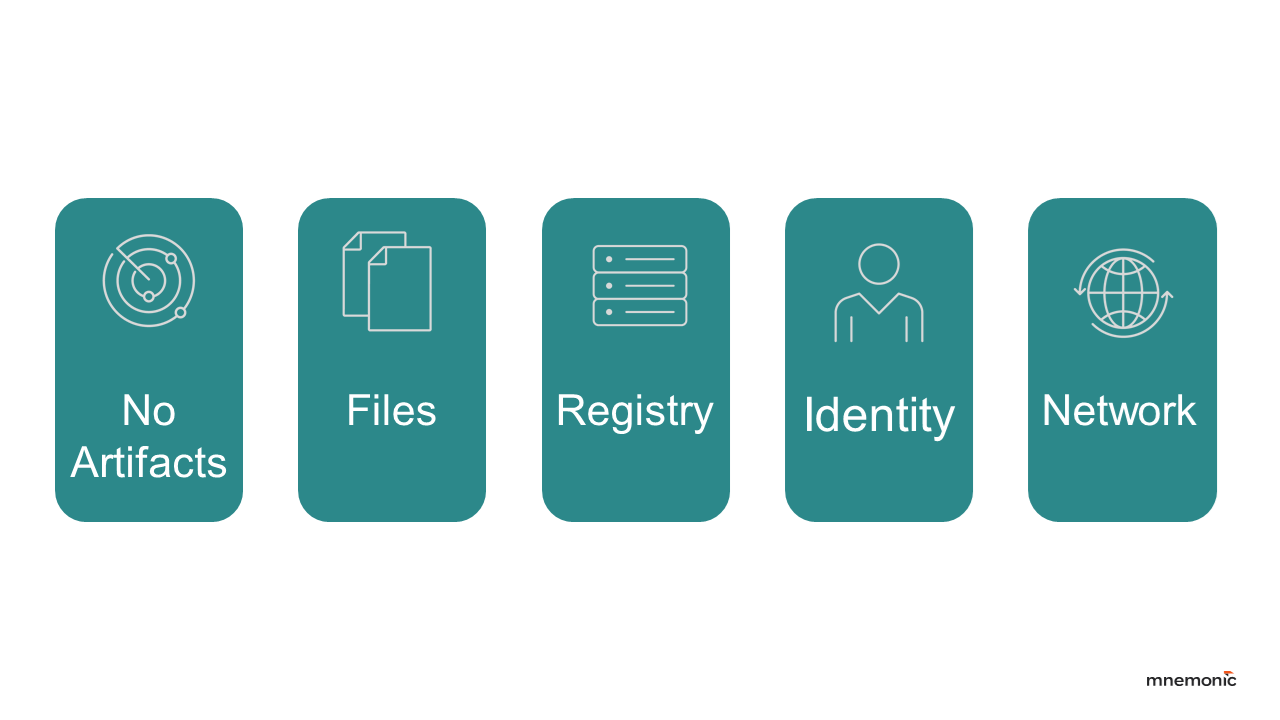

Our research found that while generic BOF execution is hard to detect reliably in memory, the actions a BOF performs often leave traces. We categorise these potential detection opportunities by artifact type:

- No artifacts: The BOF performs actions primarily using API calls that might be monitored by an EDR but leave no persistent changes (e.g., listing processes or network connections). Detection relies heavily on sophisticated EDR behavioural analysis or memory introspection, which is challenging.

- Files: The technique reads, writes, modifies, or deletes files. Detectable via file integrity monitoring (FIM) or EDR file operations logging, which can be difficult to scale due to the vast number of file events.

- Registry: The technique modifies the Windows Registry. Detectable via registry monitoring.

- Identity: The technique creates/modifies local users/groups or interacts with authentication protocols (e.g., Kerberos ticket requests). Detectable via Windows event logs and identity security solutions.

- Network: The BOF initiates specific network connections or uses unusual protocols. Detectable via network sensors or EDR network monitoring.
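Combined with a list of the APIs a BOF imports, these categories give a rough, static first guess at where its activity might surface. The Python sketch below uses a small, hand-picked and deliberately incomplete mapping of Win32 APIs to categories; it is an illustrative heuristic of our own, and the artifacts actually produced depend on how the APIs are called at runtime.

```python
# Hand-picked, incomplete mapping of Win32 APIs to artifact categories.
ARTIFACT_APIS: dict[str, set[str]] = {
    "Files": {"CreateFileW", "WriteFile", "DeleteFileW", "MoveFileExW"},
    "Registry": {"RegCreateKeyExW", "RegSetValueExW", "RegDeleteValueW"},
    "Identity": {"NetUserAdd", "NetLocalGroupAddMembers", "LsaCallAuthenticationPackage"},
    "Network": {"connect", "WSAConnect", "InternetConnectW", "DnsQuery_W"},
}


def artifact_categories(imports: list[tuple[str, str]]) -> set[str]:
    """Map (DLL, function) imports to the artifact categories they may produce."""
    functions = {func for _dll, func in imports}
    found = {cat for cat, apis in ARTIFACT_APIS.items() if functions & apis}
    # No mapped API found: treat the BOF as belonging to the low-artifact category.
    return found or {"No artifacts"}


# A hypothetical persistence BOF that writes a registry value.
print(artifact_categories([("ADVAPI32", "RegOpenKeyExW"), ("ADVAPI32", "RegSetValueExW")]))
# {'Registry'}
# A hypothetical process-listing BOF.
print(artifact_categories([("KERNEL32", "CreateToolhelp32Snapshot"), ("KERNEL32", "Process32NextW")]))
# {'No artifacts'}
```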

High-value attacker operations, such as credential dumping or establishing persistence, typically require direct interaction with the system and tend to leave some artifacts, even if the BOF execution itself is stealthy. In contrast, low-value actions such as basic reconnaissance often blend into normal system activity and are less likely to be detected. Our analysis quickly showed a clear trade-off for the attacker: low-value tasks, such as enumerating Kerberos tickets on an endpoint, stay close to baseline behaviour and pose little risk of detection, whereas the high-value tasks that deliver real benefit, such as credential dumping, diverge sharply from normal activity, generate more indicators, and consequently carry a much higher likelihood of detection.

Key takeaways for defenders

Advanced Purple Team test campaigns like Advanced Cyber Operations demonstrate that achieving 100% detection coverage against sophisticated, stealthy techniques is unrealistic. The focus must shift towards maximising visibility and resilience where it matters most. Based on our findings, here are the key actionable takeaways for security teams, particularly those managing complex environments:

Detect artifacts from technique execution

Prioritise detecting the effects or artifacts of advanced techniques, specifically changes to files, registry keys, identities, network traffic patterns or Active Directory log entries. Detecting the stealthy execution mechanism itself (like in-memory BOF execution) is often unreliable with current mainstream tools.

Validate detection for high-impact attack techniques

Ensure robust detection and prevention capabilities specifically for high-impact attacker objectives like credential dumping (T1003). Leverage granular endpoint logging, advanced EDR behavioural rules, and platform security features (like Windows Credential Guard and LSASS Protection) to force attackers to perform actions where you have reliable detection.
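Whether those platform features are configured can be audited across a fleet. The Python sketch below assumes the registry values have already been collected from `HKLM\SYSTEM\CurrentControlSet\Control\Lsa` (on Windows, for example, with the standard-library `winreg` module); it follows Microsoft's documented meanings for LSA protection (`RunAsPPL`) and Credential Guard (`LsaCfgFlags`), where 1 means enabled with a UEFI lock and 2 enabled without one. The function name and messages are our own, and because Credential Guard can also be enabled through Group Policy or by default on some newer Windows versions, a missing value is a prompt to check the running state rather than proof that the feature is off.

```python
# Documented "enabled" values for RunAsPPL and LsaCfgFlags under
# HKLM\SYSTEM\CurrentControlSet\Control\Lsa.
ENABLED_VALUES = {1: "enabled with UEFI lock", 2: "enabled without UEFI lock"}


def audit_lsa_settings(values: dict[str, int]) -> list[str]:
    """Flag LSASS hardening settings that are not configured in the registry."""
    findings = []
    for value_name, feature in (("RunAsPPL", "LSA protection"),
                                ("LsaCfgFlags", "Credential Guard")):
        setting = values.get(value_name)
        if setting not in ENABLED_VALUES:
            # Missing or 0: not configured here (may still be set by policy or default).
            findings.append(f"{feature}: {value_name}={setting!r}, verify running state")
    return findings


print(audit_lsa_settings({"RunAsPPL": 1, "LsaCfgFlags": 2}))  # []
print(audit_lsa_settings({"RunAsPPL": 2}))
# ['Credential Guard: LsaCfgFlags=None, verify running state']
```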

Document detection gaps

Identify and formally document techniques (especially low-artifact reconnaissance or execution methods) where reliable detection is currently not feasible. Use this knowledge to prioritise compensating controls (like network segmentation or access control), and guide future security investments, rather than chasing detections for low-impact TTPs.

Prevent and detect C2 agent execution

Before executing advanced attack techniques through a mechanism like BOF, attackers must first deploy a C2 agent. This means running an initial malicious payload in your environment, a step defenders cannot afford to ignore. Our research shows that if an attacker successfully runs their C2 agent undetected, they have already bypassed a key defense. This early success makes it significantly harder to detect their subsequent actions. Therefore, preventing or detecting the initial C2 establishment remains absolutely critical.
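One common, payload-independent way to hunt for an established C2 channel is to look for beaconing: an agent checking in at regular intervals. The Python sketch below scores the regularity of connection times for a single source/destination pair (grouping is assumed to happen upstream) using the coefficient of variation of the gaps between connections. The minimum sample size and the 0.1 threshold are arbitrary assumptions, and operators who configure large jitter will evade this simple version.

```python
from statistics import mean, pstdev
from typing import Optional


def beacon_score(timestamps: list[float], min_events: int = 4) -> Optional[float]:
    """Coefficient of variation of gaps between connections (0 = perfectly periodic)."""
    if len(timestamps) < min_events:
        return None  # too few connections to judge
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average = mean(gaps)
    if average == 0:
        return None  # all connections at the same instant
    return pstdev(gaps) / average


def looks_like_beacon(timestamps: list[float], threshold: float = 0.1) -> bool:
    """True when connections to one destination are suspiciously regular."""
    score = beacon_score(timestamps)
    return score is not None and score < threshold


# Check-ins roughly every 60 s versus irregular, user-driven traffic.
print(looks_like_beacon([0, 60, 121, 180, 241, 300]))  # True
print(looks_like_beacon([0, 5, 200, 210, 900, 1500]))  # False
```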

Conclusion

Advanced threat simulations expose a hard truth: skilled attackers still evade detection. Our intelligence-led Purple Team exercises, like Advanced Cyber Operations, help organisations confront this by validating defenses, identifying gaps, and focusing on artifact-based detection. Knowing what you can and can't detect empowers smarter investments and sharper incident response. Continuous, realistic testing is key to staying ahead.

Advanced Cyber Operations is our first full campaign at the Advanced Purple Team level. It will evolve with threat-actor tradecraft, focusing on techniques with viable but challenging detection paths. Rather than showcasing undetectable methods, we prioritise realistic detection testing. We’re also expanding into cloud platforms and sector-specific campaigns. Stay tuned.